As we move into 2026, marketing agencies are facing a new kind of visibility challenge. Clients are no longer only asking where they rank on Google — they’re asking why AI tools like ChatGPT, Gemini, and Perplexity recommend competitors instead.

This shift has given rise to AI Search Visibility and Generative Engine Optimization (GEO) — the practice of understanding how AI systems reference, cite, and describe brands inside generated answers.

To support this new workflow, a wave of specialized tools has emerged. These platforms don’t replace SEO tools; instead, they help agencies track, analyze, and influence how AI engines interpret brands.

Below is a curated list of seven AI search visibility tools agencies should be aware of in 2026 — focusing only on platforms built specifically for LLM visibility, citations, and generative search insights.

What to Look for in an AI Search Visibility Tool

Before comparing tools, agencies should evaluate platforms using three core criteria:

1. Multi-Engine Coverage

Does the tool monitor visibility across ChatGPT, Gemini, Perplexity, and Google AI Overviews?

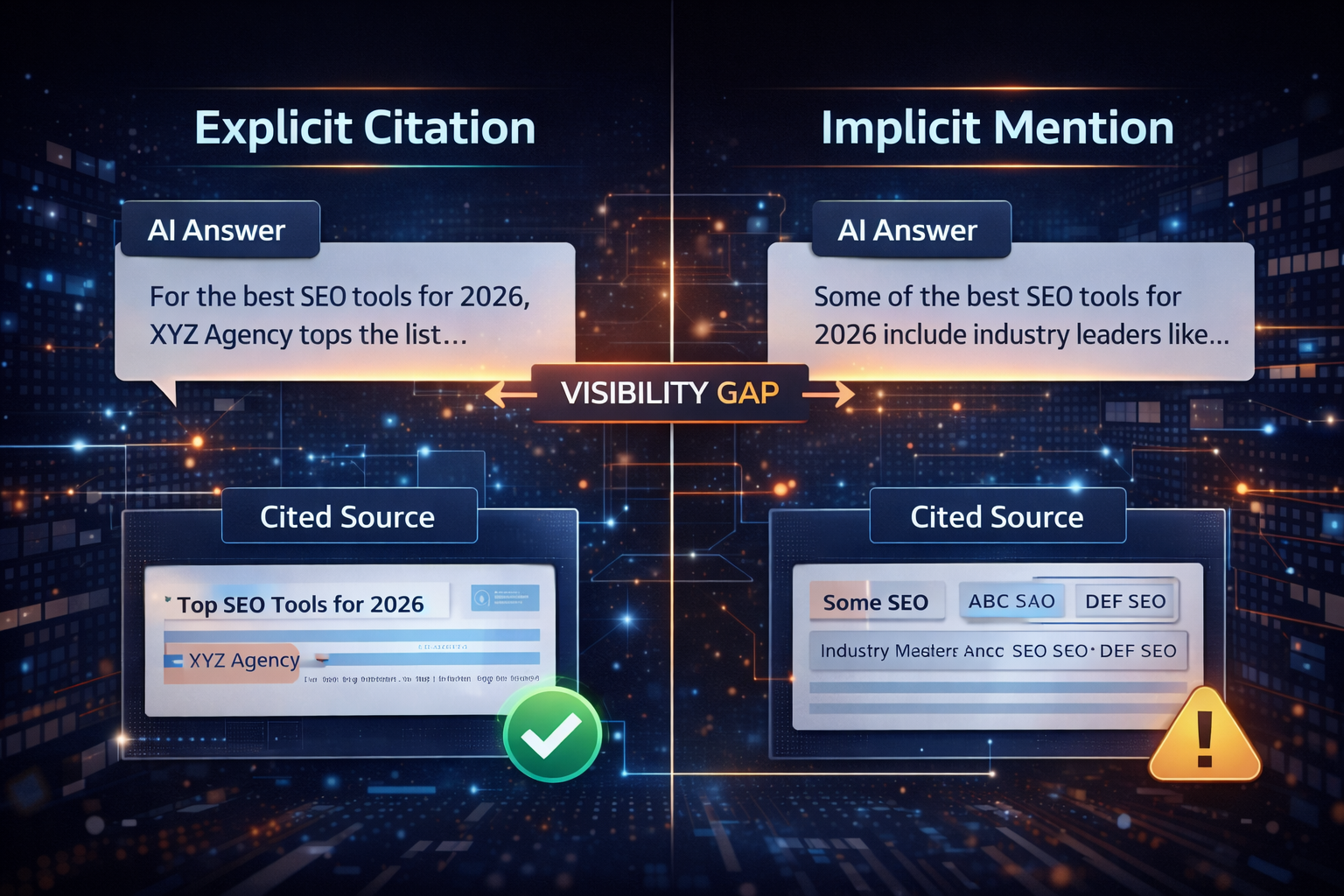

2. Visibility Depth

Can it distinguish between explicit citations and implicit mentions coming from third-party pages?

3. Actionability

Does it help teams move from insight to execution — content, outreach, or strategy?

The 7 AI Search Visibility Tools Agencies Are Comparing in 2026

1. Profound AI

Category: LLM Brand Monitoring

Best For: Agencies auditing brand presence inside AI answers

Profound AI focuses on monitoring how brands appear in AI-generated responses, primarily across conversational interfaces like ChatGPT. Its core value lies in helping agencies understand whether a client is referenced at all, and how those references change based on prompt phrasing.

How Agencies Use It

Agencies typically use Profound AI during discovery and reporting phases:

Monitoring brand presence:

Teams test recurring prompts to see whether a client appears in AI answers and how that presence shifts over time or across different wording.

Baseline visibility audits:

Profound is often used to establish an initial “AI visibility baseline” before content or PR efforts begin, helping agencies benchmark improvement later.
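To make the idea of a baseline concrete, here is a minimal sketch of how an agency might score one by hand. It does not reflect how Profound AI works internally. It assumes the AI answers have already been collected and saved as text, and the brand names (Acme CRM, Zento, Pipeloop), prompts, and function names are all invented for illustration.

```python
import re


def brand_mentioned(answer: str, brand: str) -> bool:
    """Case-insensitive, whole-word check for the brand name in one answer."""
    pattern = rf"\b{re.escape(brand)}\b"
    return re.search(pattern, answer, re.IGNORECASE) is not None


def presence_rate(answers_by_prompt: dict[str, str], brand: str) -> float:
    """Share of tracked prompts whose saved answer mentions the brand."""
    if not answers_by_prompt:
        return 0.0
    hits = sum(brand_mentioned(text, brand) for text in answers_by_prompt.values())
    return hits / len(answers_by_prompt)


# Hypothetical answers saved during a baseline audit (prompt -> answer text).
baseline = {
    "best crm for small agencies": "Popular options include Acme CRM and Zento.",
    "top crm tools 2026": "Zento and Pipeloop are frequently recommended.",
    "crm with built-in reporting": "Acme CRM offers dashboards; Zento does too.",
    "affordable crm for startups": "Pipeloop has a free tier.",
}

print(f"Baseline presence: {presence_rate(baseline, 'Acme CRM'):.0%}")  # 50%
```

Re-running the same prompt set after a content or PR push and comparing the two rates gives a simple before-and-after benchmark.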

Strengths & Limits

Strengths

Simple visibility checks across AI responses

Useful for early-stage AI presence audits

Prompt-level comparison over time

Limits

No outreach or mention recovery workflows

No content creation or citation guidance tied to queries

Limited historical depth compared to GEO-focused platforms

2. Wellows

Category: AI Search Visibility & GEO Intelligence

Best For: Marketing agencies managing content, PR, and AI visibility together

Wellows is built to help agencies understand how and why brands appear inside AI-generated answers — across ChatGPT, Gemini, Perplexity, and Google AI Overviews. Instead of treating AI visibility as a static snapshot, it tracks how citations and mentions evolve over time as LLMs update.

How Agencies Use It

Agencies typically use Wellows in two distinct workflows:

Winning missed implicit mentions:

Wellows identifies third-party pages (reviews, guides, listicles, insights) that AI models cite, where competitors are mentioned but the client is not. Outreach support then helps agencies recover those missed mentions through verified publisher contacts and structured outreach.

Winning explicit citations:

Wellows surfaces real user queries being answered by LLMs where competitors are named explicitly. Its AI writing assistant helps agencies create content aligned to those exact queries, structured so AI engines can clearly parse and cite it.

Together, these workflows allow agencies to influence both what AI learns from and who AI names.
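The missed-mention workflow comes down to a simple filter: among the pages an AI engine cites, find the ones that name a competitor but not the client. The sketch below shows that logic only and is not Wellows' implementation. It assumes the cited URLs and their page text have already been gathered, and every name in it (`CitedPage`, `gap_pages`, the brands, the example.com URLs) is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CitedPage:
    url: str
    text: str  # page text, assumed to be fetched and cleaned elsewhere


def mentions(text: str, name: str) -> bool:
    """Simple case-insensitive substring check."""
    return name.lower() in text.lower()


def gap_pages(pages: list[CitedPage], client: str, competitors: list[str]):
    """Return (url, competitors named) for pages that skip the client."""
    gaps = []
    for page in pages:
        named = [c for c in competitors if mentions(page.text, c)]
        if named and not mentions(page.text, client):
            gaps.append((page.url, named))
    return gaps


pages = [
    CitedPage("https://example.com/best-crm", "We compared Zento and Pipeloop for small teams."),
    CitedPage("https://example.com/crm-guide", "Acme CRM and Zento both offer reporting."),
    CitedPage("https://example.com/pricing-roundup", "Pricing varies widely between vendors."),
]

for url, named in gap_pages(pages, "Acme CRM", ["Zento", "Pipeloop"]):
    print(url, "names", ", ".join(named))
```

Each URL returned is a candidate for outreach. Pages that already name the client, or that name no competitor at all, are left out.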

Strengths & Limits

Strengths

Clear separation of explicit citations vs. implicit mentions

Historical visibility tracking across LLM updates

Outreach support tied directly to AI-cited sources

Content guidance based on real LLM queries

Limits

Focused on AI search visibility, not general social or brand listening

3. PromptWatch

Category: LLM Prompt Monitoring & Response Tracking

Best For: Agencies tracking how prompts influence AI outputs

PromptWatch is designed to help teams observe how large language models respond to different prompt structures over time. Rather than focusing on brand authority or citations, it emphasizes prompt experimentation and response consistency.

How Agencies Use It

Agencies typically use PromptWatch for experimentation and diagnostics:

Prompt behavior analysis:

Teams test how small changes in prompt phrasing alter AI responses, which helps them gauge volatility and prompt sensitivity.

Output comparison over time:

Agencies track whether responses remain stable or drift after model updates.
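One rough way to quantify drift is to compare a saved answer with a newer answer to the same prompt. The sketch below uses Python's standard `difflib` for that. It is only an illustration and not PromptWatch's method. The 0.8 threshold, the function names, and the sample answers are assumptions made for the example.

```python
from difflib import SequenceMatcher


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so formatting changes are ignored."""
    return " ".join(text.lower().split())


def similarity(before: str, after: str) -> float:
    """Character-level similarity between 0.0 and 1.0."""
    return SequenceMatcher(None, normalize(before), normalize(after)).ratio()


def has_drifted(before: str, after: str, threshold: float = 0.8) -> bool:
    """Flag a response whose similarity falls below the chosen threshold."""
    return similarity(before, after) < threshold


# Hypothetical answers to the same prompt, before and after a model update.
old_answer = "Acme CRM is a popular choice for agencies."
new_answer = "Zento is widely recommended for enterprise teams."

print(has_drifted(old_answer, old_answer))  # False
print(has_drifted(old_answer, new_answer))  # True
```

Character-level similarity is a blunt measure. Two answers can use different wording and still recommend the same brands, so a flagged response still needs a manual read.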

Strengths & Limits

Strengths

Useful for prompt testing and experimentation

Tracks response variation across time

Helpful for internal AI research workflows

Limits

No citation or mention tracking

No competitor visibility mapping

Not designed for outreach or content strategy

4. Scrunch AI

Category: Brand Narrative & AI Presence Analysis

Best For: Agencies auditing how brands are described by AI models

Scrunch AI focuses on how AI systems describe brands in narrative form. It helps agencies understand tone, framing, and consistency across AI-generated answers rather than citation mechanics.

How Agencies Use It

Agencies use Scrunch primarily for messaging and reputation analysis:

Brand narrative audits:

Teams evaluate how AI summarizes a brand’s positioning, strengths, and weaknesses.

Sentiment consistency checks:

Scrunch highlights whether descriptions vary across prompts or engines.

Strengths & Limits

Strengths

Strong narrative and tone analysis

Useful for brand messaging reviews

Simple sentiment visibility

Limits

No visibility gap detection

No outreach or recovery workflows

Limited insight into why AI sources certain content

5. Xfunnel AI

Category: AI Search Funnel & Conversion Insights

Best For: Agencies linking AI discovery to downstream conversions

Xfunnel focuses on how AI-driven discovery impacts funnel performance. It connects AI search touchpoints with lead and conversion data rather than citation mechanics.

How Agencies Use It

Agencies typically apply Xfunnel post-discovery:

AI-assisted funnel attribution:

Teams analyze how AI mentions contribute to traffic or conversions.

Performance reporting:

Agencies use these reports to show downstream impact after AI exposure.

Strengths & Limits

Strengths

Connects AI discovery to conversion metrics

Useful for ROI-focused reporting

Complements attribution models

Limits

Does not track AI citations directly

No visibility gap or mention recovery

Limited use for early-stage GEO strategy

6. Peec AI

Category: AI Search Performance Tracking

Best For: Agencies monitoring AI-generated answers at scale

Peec AI tracks AI-generated responses across predefined prompts and topics. It is primarily used to measure how often and where a brand appears, not to influence those answers.

How Agencies Use It

Agencies rely on Peec for monitoring:

Answer tracking:

Teams observe whether a brand appears across repeated AI answers.

Trend monitoring:

Teams watch visibility over time to spot sudden drops or spikes.
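A sudden drop or spike can be defined as a period-over-period change above some threshold. The sketch below is one way to express that and is not a description of Peec AI's alerting. The weekly figures, the 0.2 threshold, and the function name are invented for illustration.

```python
def flag_swings(series, threshold=0.2):
    """Flag periods whose visibility rate moved by at least `threshold`
    (absolute change) compared with the previous period."""
    flags = []
    for (_, previous), (label, current) in zip(series, series[1:]):
        if abs(current - previous) >= threshold:
            direction = "drop" if current < previous else "spike"
            flags.append((label, direction))
    return flags


# Hypothetical weekly share of tracked prompts in which the brand appeared.
weekly = [("W1", 0.50), ("W2", 0.55), ("W3", 0.25), ("W4", 0.30), ("W5", 0.60)]

print(flag_swings(weekly))  # [('W3', 'drop'), ('W5', 'spike')]
```

A fixed threshold is the simplest choice. Teams tracking only a handful of prompts may want a higher one, since small samples swing more from week to week.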

Strengths & Limits

Strengths

Scalable monitoring across prompts

Simple visibility trend tracking

Useful for alerting

Limits

No actionable guidance to fix gaps

No outreach or content workflows

Limited competitive context

7. BrandRank AI

Category: AI Brand Monitoring & Reporting

Best For: Agencies producing executive-level AI visibility reports

BrandRank AI is positioned as a reporting layer for AI visibility. It focuses on summarizing presence and rankings rather than explaining drivers.

How Agencies Use It

Agencies mainly use BrandRank for reporting:

Client reporting:

High-level visibility summaries for stakeholders.

Brand benchmarking:

Basic comparison across competitors.

Strengths & Limits

Strengths

Clear executive dashboards

Simple benchmarking visuals

Easy client reporting

Limits

Limited insight into citation sources

No recovery or optimization workflows

Not built for GEO execution

Sponsored Post. Check out our Partner Options at https://best-ai-tools.org/partner