The AI landscape is shifting towards efficiency, specialization, and regulation as the era of simply scaling models reaches its limits. Discover how this transformation will impact businesses and society, focusing on intelligent reasoning systems and domain-specific solutions. Take action now by exploring AI learning resources to equip your workforce with the skills needed for this new era.

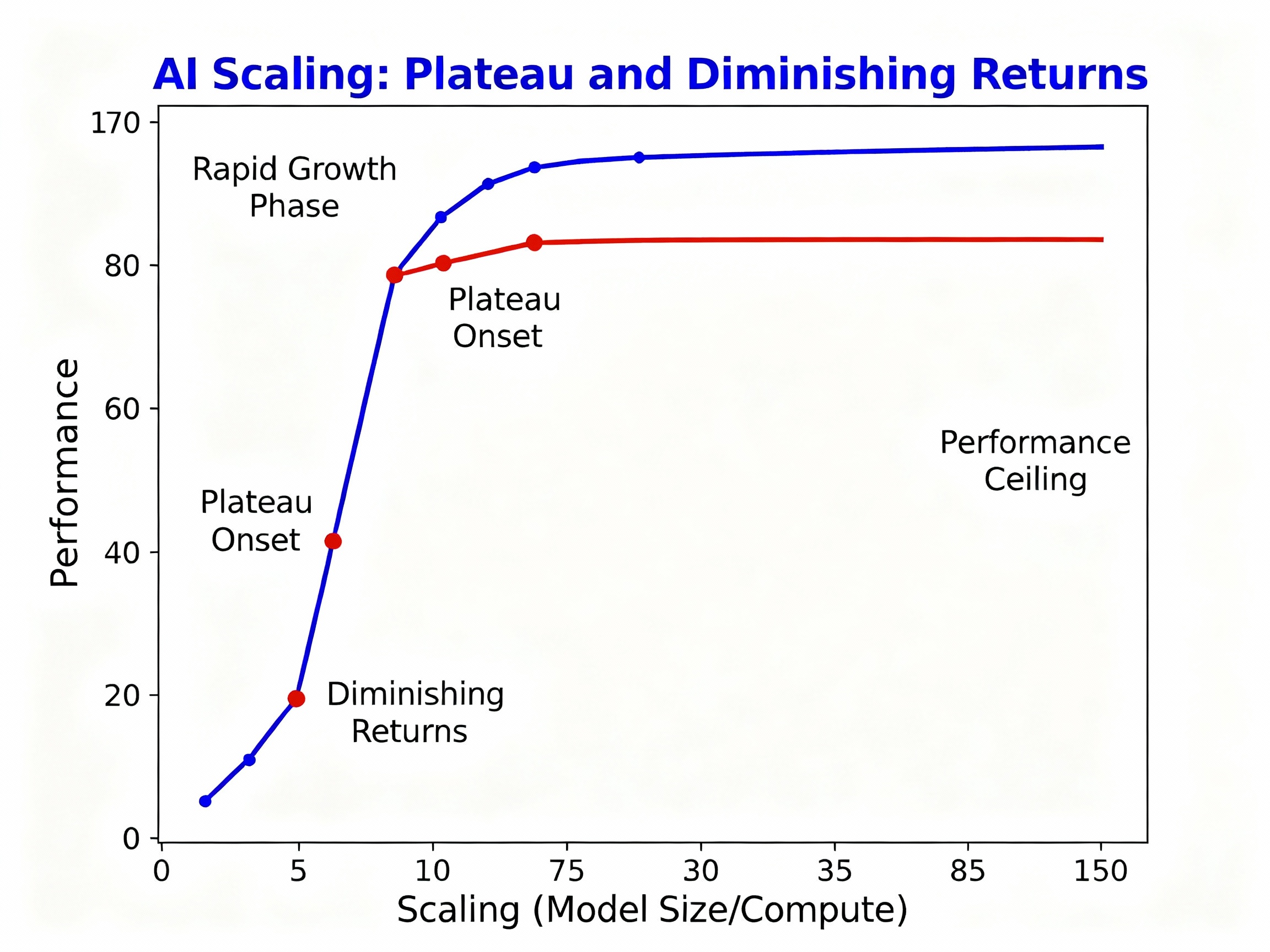

The AI Scaling Plateau: Has 'Bigger is Better' Reached its Limit?

Is the relentless pursuit of bigger and better Large Language Models (LLMs) finally hitting a wall? For years, the mantra has been simple: scale up the model size, feed it more data, and watch performance soar. But now, a growing chorus of voices suggests that this approach is reaching its limits, ushering in a new era of AI development.

Diminishing Returns in AI Training: Analyzing the Plateau

A growing share of the industry believes that traditional LLM scaling is facing diminishing returns. The low-hanging fruit has been picked, and each additional parameter or training token yields progressively smaller improvements. Ilya Sutskever, formerly Chief Scientist at OpenAI and now co-founder of Safe Superintelligence (SSI), has bluntly argued that pre-training as we know it will end because the internet's supply of data is finite. This sentiment echoes broader concerns about the limits of simply throwing more resources at the problem. Some observers also point to signs of plateauing in GPT-4-class models, which suggests that the current approach may be approaching its practical ceiling.
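To make "diminishing returns" concrete, the Python sketch below evaluates a parametric scaling law of the form L(N, D) = E + A/N^α + B/D^β. Here N is the parameter count and D is the number of training tokens. The constants are illustrative values close to the fit published by Hoffmann et al. (2022) in the Chinchilla study. Treat them as assumptions, not as predictions for any real model.

```python
# Sketch: diminishing returns under a Chinchilla-style parametric scaling law.
#   L(N, D) = E + A / N**alpha + B / D**beta
# The constants are illustrative (close to the Hoffmann et al. 2022 fit) and are assumptions.
E, A, B = 1.69, 406.4, 410.7
ALPHA, BETA = 0.34, 0.28


def predicted_loss(n_params: float, n_tokens: float) -> float:
    """Predicted pre-training loss for a model with n_params trained on n_tokens."""
    return E + A / n_params**ALPHA + B / n_tokens**BETA


def gains_per_step(n_tokens: float, sizes: list[float]) -> list[float]:
    """Loss reduction from each successive model size in `sizes`, holding data fixed."""
    losses = [predicted_loss(n, n_tokens) for n in sizes]
    return [prev - cur for prev, cur in zip(losses, losses[1:])]


if __name__ == "__main__":
    sizes = [1e9, 1e10, 1e11, 1e12]  # 1B -> 1T parameters, each step 10x larger
    for size, gain in zip(sizes[1:], gains_per_step(1e12, sizes)):
        print(f"up to {size:.0e} params: loss improves by {gain:.3f}")
```

Under these assumptions, each tenfold jump in parameters buys only about 46% of the previous jump's improvement (a factor of 10^-0.34). No amount of scaling pushes the loss below the irreducible term E.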

Challenges in Scaling: Data Depletion, Compute Costs, and Energy Constraints

The challenges in scaling are multifaceted. First, high-quality training data is becoming scarce. As models consume more of the readily available text and code on the internet, developers are forced to rely on less informative or even synthetic data, which yields smaller gains. Second, the computational cost of training is escalating rapidly. A state-of-the-art LLM can cost millions of dollars to train, which puts frontier development within reach of only a handful of well-funded organizations and raises the barrier to entry for smaller players and researchers. Finally, energy consumption is a growing concern. Training and running large AI models requires vast amounts of electricity, which contributes to carbon emissions and raises questions about the environmental sustainability of the current AI trajectory.
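A widely used rule of thumb (Kaplan et al., 2020) puts the training compute of a dense transformer at roughly 6 × N × D floating-point operations, for N parameters and D training tokens. The sketch below turns that estimate into GPU-hours, dollars, and kilowatt-hours. The `Hardware` profile is entirely hypothetical, including peak throughput, utilization, hourly price, and power draw. Plug in real figures to get a real estimate.

```python
from dataclasses import dataclass


@dataclass
class Hardware:
    """Hypothetical accelerator profile; every value is a placeholder, not a vendor spec."""
    peak_flops: float        # peak FLOP/s per GPU
    utilization: float       # fraction of peak actually achieved during training
    usd_per_gpu_hour: float  # rental price per GPU-hour
    kw_per_gpu: float        # average power draw per GPU, in kilowatts


def training_flops(n_params: float, n_tokens: float) -> float:
    """Approximate dense-transformer training compute: ~6 FLOPs per parameter per token."""
    return 6.0 * n_params * n_tokens


def training_budget(n_params: float, n_tokens: float, hw: Hardware) -> dict[str, float]:
    """Convert the FLOP estimate into GPU-hours, dollars, and energy for one training run."""
    flops = training_flops(n_params, n_tokens)
    gpu_hours = flops / (hw.peak_flops * hw.utilization) / 3600.0
    return {
        "flops": flops,
        "gpu_hours": gpu_hours,
        "usd": gpu_hours * hw.usd_per_gpu_hour,
        "kwh": gpu_hours * hw.kw_per_gpu,
    }


if __name__ == "__main__":
    hw = Hardware(peak_flops=1e15, utilization=0.4, usd_per_gpu_hour=2.0, kw_per_gpu=0.7)
    # Example: a 70B-parameter model trained on 1.4T tokens (~20 tokens per parameter).
    for key, value in training_budget(70e9, 1.4e12, hw).items():
        print(f"{key:>9}: {value:,.3g}")
```

With these placeholder numbers, the run comes to roughly 408,000 GPU-hours, about $0.8 million, and about 286 MWh. That covers a single training run only, before failed experiments, data preparation, or inference. Compute grows with the product N × D, so scaling both together multiplies the bill quickly.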

The End of the Scaling Era?: Rethinking AI Development

This apparent plateau is forcing researchers and developers to rethink their approach to AI development. The focus is shifting from simply scaling up models to developing more efficient architectures, improving training techniques, and exploring alternative data sources. Some are exploring techniques like knowledge distillation, where a smaller, more efficient model is trained to mimic the behavior of a larger one. Others are investigating novel architectures that can achieve better performance with fewer parameters. The era of blindly scaling up LLMs may be coming to an end, replaced by a more nuanced and resource-conscious approach to AI innovation. This shift is driven by a combination of practical limitations and a growing awareness of the environmental and economic costs associated with ever-larger models. The AI News section on our website provides daily updates on the changing AI landscape.
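Knowledge distillation is commonly trained with a "soft-target" loss in the style of Hinton et al. (2015). Both teacher and student logits are softened with a temperature T. The student is then penalized by the KL divergence between the two distributions, scaled by T². The standard-library sketch below computes that term for a single example. In practice, it is usually combined with ordinary cross-entropy on the true labels and computed inside a deep-learning framework.

```python
import math


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Temperature-scaled softmax, shifted by the max logit for numerical stability."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits: list[float], student_logits: list[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on softened distributions, scaled by T**2."""
    p = softmax(teacher_logits, temperature)  # soft targets from the large teacher
    q = softmax(student_logits, temperature)  # predictions of the small student
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature**2


if __name__ == "__main__":
    teacher = [4.0, 1.5, 0.2]
    print(distillation_loss(teacher, [3.8, 1.4, 0.3]))  # student close to teacher: small loss
    print(distillation_loss(teacher, [0.2, 1.5, 4.0]))  # student disagrees: much larger loss
```

A higher temperature exposes more of how the teacher ranks the wrong answers. That extra signal is what lets a small student learn more from a large teacher than from hard labels alone.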

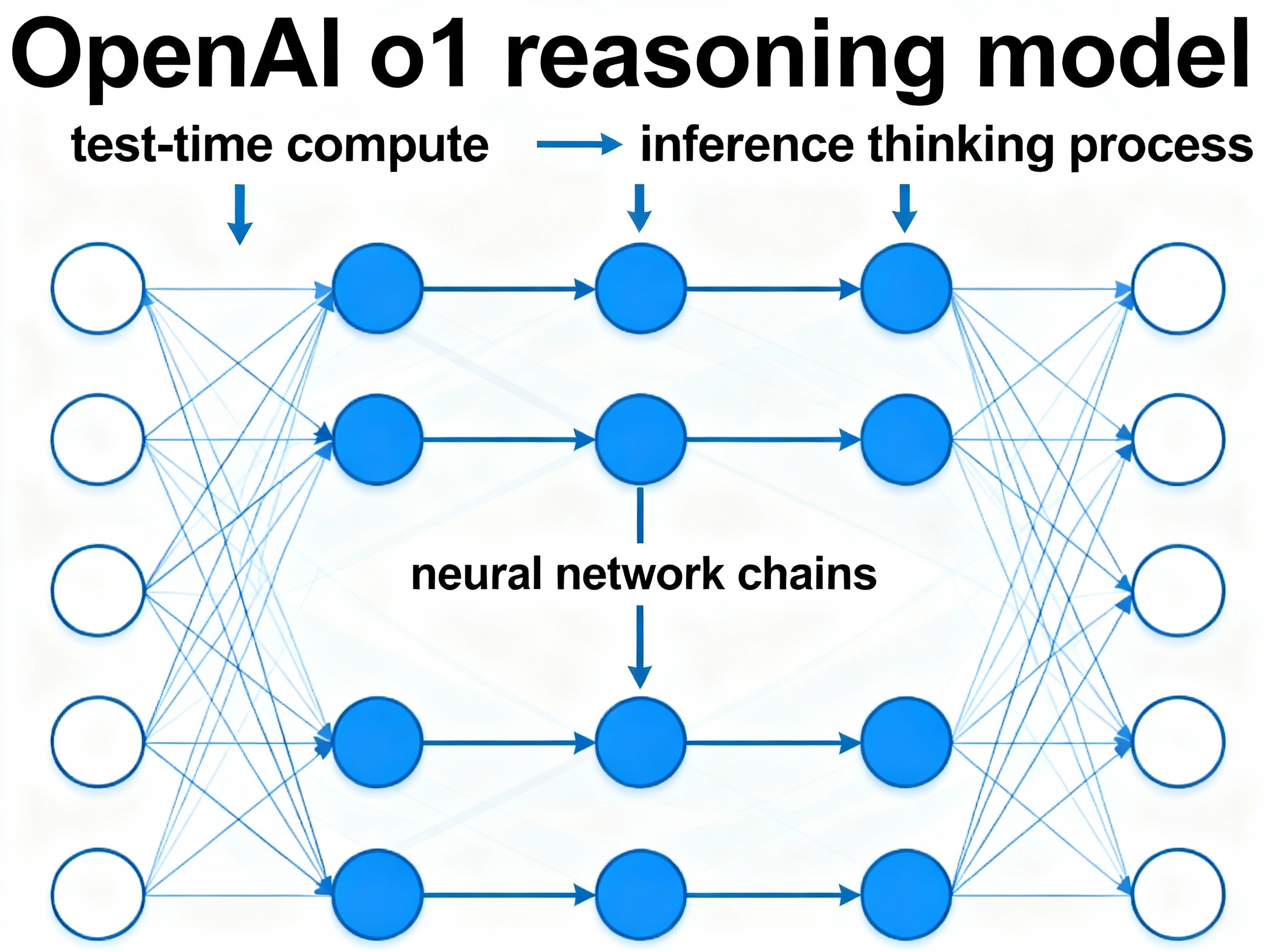

Test-Time Compute: A New Paradigm for AI Innovation

Forget simply building bigger models; the future of AI may hinge on smarter computation. OpenAI's o1 model has brought "test-time compute" into focus as a compelling alternative to traditional scaling, marking a potential paradigm shift in AI innovation. Instead of relying solely on massive models trained on enormous datasets, the emphasis is moving to allocating computational resources intelligently during the inference (question-answering) phase. A model can then reason more effectively by spending more processing power on complex queries.

Inference-Time Reasoning: Smarter, Not Just Bigger

The core idea behind test-time compute is to optimize the inference process. Traditional scaling increases the size of the model and the amount of training data. Test-time compute, on the other hand, allocates computation intelligently at the moment a question is asked. Think of it like this: instead of giving everyone the same amount of time on a supercomputer, you give more time to those working on the hardest problems. The result can be better performance, even from smaller models, because the model concentrates its computational power where it is needed most. This allows for more nuanced and accurate responses, especially for complex or ambiguous prompts. That matters as users increasingly turn to tools like ChatGPT for sophisticated tasks.
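OpenAI has not published how o1 spends its extra inference compute, so the following is not its method. It illustrates one simple, well-documented form of test-time compute: self-consistency, or majority voting over several sampled answers. The sketch assumes each sample is independently correct with probability p. It also assumes a binary right/wrong outcome, which is pessimistic when wrong answers scatter across many options. Under those assumptions, k-sample majority-vote accuracy is a binomial tail. The `samples_for` policy, which spends more samples on questions estimated to be harder, is hypothetical.

```python
from math import comb


def majority_vote_accuracy(p: float, k: int) -> float:
    """P(majority of k independent samples is correct) in a binary right/wrong model; k odd."""
    if k % 2 == 0:
        raise ValueError("use an odd number of samples to avoid ties")
    return sum(comb(k, i) * p**i * (1 - p) ** (k - i) for i in range(k // 2 + 1, k + 1))


def samples_for(difficulty: float, max_samples: int = 15) -> int:
    """Hypothetical policy: scale the sample count with an estimated difficulty in [0, 1]."""
    k = 1 + round(difficulty * (max_samples - 1))
    return k if k % 2 == 1 else k - 1  # keep k odd and within the budget


if __name__ == "__main__":
    for k in (1, 5, 15):
        print(f"p=0.6, {k:>2} samples -> accuracy {majority_vote_accuracy(0.6, k):.3f}")
    for d in (0.1, 0.5, 0.9):
        print(f"difficulty {d}: spend {samples_for(d)} samples")
```

Extra samples only help when the model is already right more often than wrong. With p = 0.4, voting drives accuracy down, not up. Allocating compute by difficulty is useful only when the extra reasoning is likely to be productive.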

A New Scaling Law and Shifting Investments

The implications of test-time compute are far-reaching. Microsoft CEO Satya Nadella has commented on the emergence of a new scaling law, hinting that raw model size isn't the only path forward. This shift is also reflected in venture capital, with investment increasingly flowing into inference chips rather than solely focusing on training infrastructure. Firms like Sequoia Capital are betting big on companies that can optimize AI inference, recognizing that efficient deployment is just as crucial as model development. It’s not just OpenAI; other leading AI labs, including Anthropic, Google DeepMind, and xAI, are also actively developing their own test-time compute variants, signaling a broad industry consensus on its potential. You can stay up to date on the latest developments in AI from all these companies on our AI News page.

Test-Time Compute vs. Traditional Scaling: Performance and Premium Positioning

While details on the exact architecture of OpenAI's o1 model remain scarce, its pricing strategy speaks volumes. The o1 model commands a premium, reflecting the value of its enhanced reasoning capabilities. OpenAI also offers o1-pro, designed for reasoning-intensive workflows. This premium positioning underscores the economic viability of test-time compute as a business model. As Noam Brown, an OpenAI researcher, articulated, the focus is on building AI that can think smarter, not just process more data. This approach is especially relevant in edge computing, where resources are limited, and test-time optimization becomes paramount. By optimizing the computational process during inference, the o1 model represents a significant step towards more efficient and powerful AI systems.
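Part of the reason reasoning models cost more per request is how OpenAI bills them. According to its documentation for reasoning models, the hidden "reasoning tokens" the model generates are billed as output tokens, even though the user never sees them. The sketch below estimates per-request cost under that billing model. The per-million-token prices are hypothetical placeholders, so substitute current list prices before drawing conclusions.

```python
def request_cost_usd(input_tokens: int, visible_output_tokens: int, reasoning_tokens: int,
                     usd_per_m_input: float, usd_per_m_output: float) -> float:
    """Estimate one request's cost when hidden reasoning tokens are billed as output tokens."""
    billed_output = visible_output_tokens + reasoning_tokens
    return (input_tokens * usd_per_m_input + billed_output * usd_per_m_output) / 1_000_000


if __name__ == "__main__":
    # Hypothetical prices in USD per million tokens; not the list price of any real model.
    IN_PRICE, OUT_PRICE = 10.0, 40.0
    plain = request_cost_usd(2_000, 500, 0, IN_PRICE, OUT_PRICE)
    thinking = request_cost_usd(2_000, 500, 8_000, IN_PRICE, OUT_PRICE)
    print(f"no reasoning: ${plain:.4f}; with 8k reasoning tokens: ${thinking:.4f}")
```

Take the same prompt and the same visible answer. In this example, spending 8,000 tokens "thinking" raises the cost from $0.04 to $0.36, a ninefold increase. That is the economics behind premium pricing for test-time compute.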

Test-time compute represents a crucial step toward more efficient AI, setting the stage for a future where AI inference is optimized for both performance and resource utilization. As edge computing becomes more prevalent, this optimization will become even more critical.

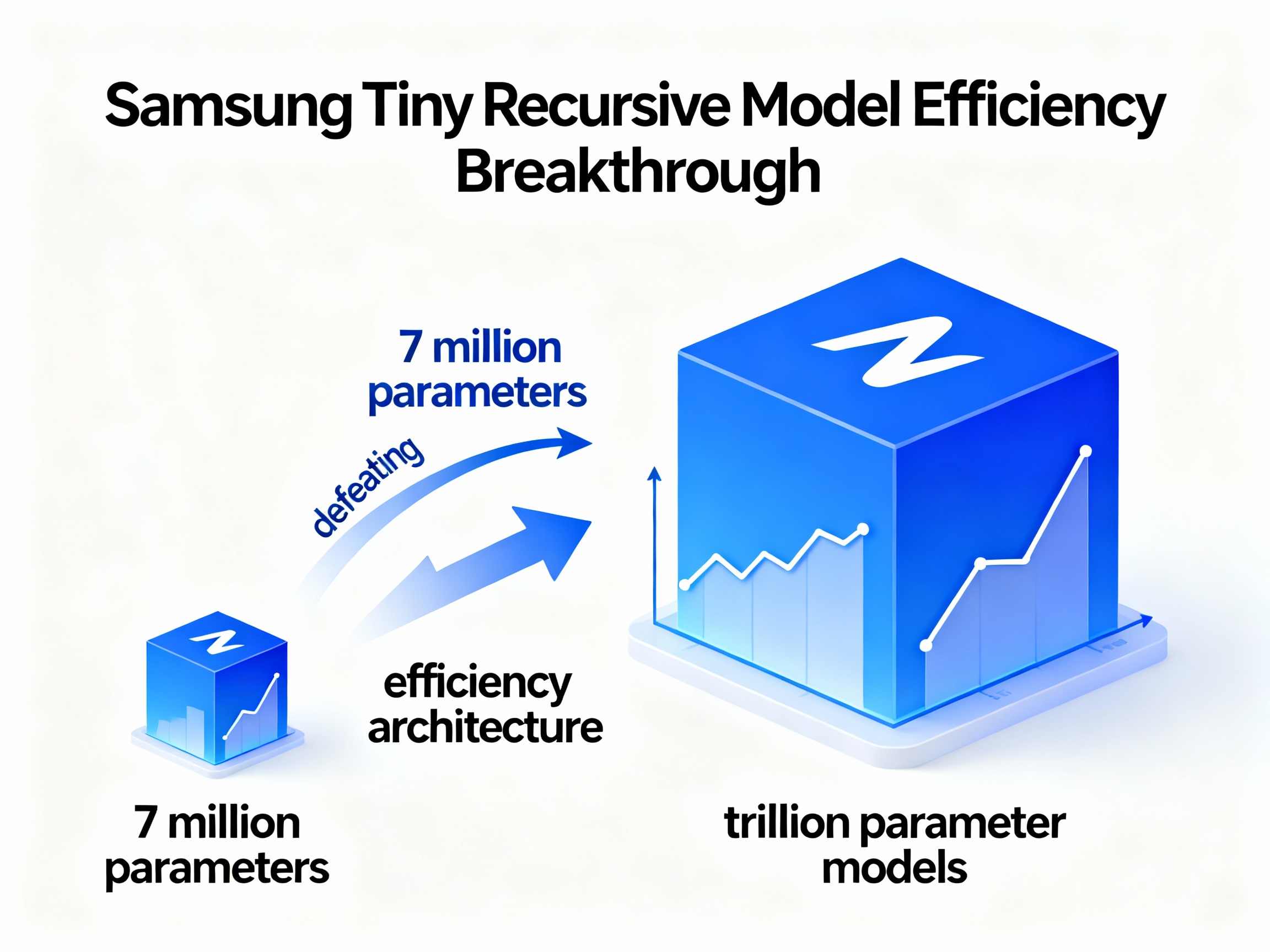

Samsung's Tiny Recursive Model (TRM): Challenging the Scaling Paradigm

In a world obsessed with bigger models, Samsung is turning heads with something surprisingly small. Its new Tiny Recursive Model (TRM), with a mere 7 million parameters, is not just cute; it is shockingly effective. It outperforms several language models thousands of times its size on demanding reasoning benchmarks such as the ARC-AGI puzzles.
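Samsung's paper describes TRM as a single tiny network applied recursively. For a number of inner steps, it refines a latent "scratchpad" z from the question x, the current answer y, and z itself. It then updates the answer y from (y, z) and repeats this cycle several times. The sketch below reproduces only that control flow. The learned network is replaced by hypothetical hand-written update rules (a damped Newton-style refinement toward √x). It therefore shows the shape of recursive refinement, not TRM's actual model, training, or results.

```python
def latent_step(x: float, y: float, z: float) -> float:
    """Hypothetical stand-in for the learned 'reasoning' update: nudge z toward a correction."""
    target = (x / y - y) / 2.0  # Newton correction for y**2 = x
    return 0.5 * z + 0.5 * target


def answer_step(y: float, z: float) -> float:
    """Hypothetical stand-in for the learned answer update: apply the latent correction."""
    return y + z


def recursive_refine(x: float, y0: float = 1.0, n_latent: int = 6, n_cycles: int = 8) -> float:
    """Refine an answer by repeatedly 'thinking' in latent space, then updating the answer."""
    y, z = y0, 0.0
    for _ in range(n_cycles):      # outer cycles: improve the answer again and again
        for _ in range(n_latent):  # inner steps: update the scratchpad from (x, y, z)
            z = latent_step(x, y, z)
        y = answer_step(y, z)      # then update the answer from (y, z)
    return y


if __name__ == "__main__":
    print(recursive_refine(2.0))  # approaches sqrt(2)
    print(recursive_refine(9.0))  # approaches 3
```

The design point is that depth comes from reuse: the same small functions are applied many times. Extra cycles buy accuracy without adding parameters.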

Smarter, Not Bigger: TRM's Architectural Innovation

For years, the prevailing wisdom in AI has been

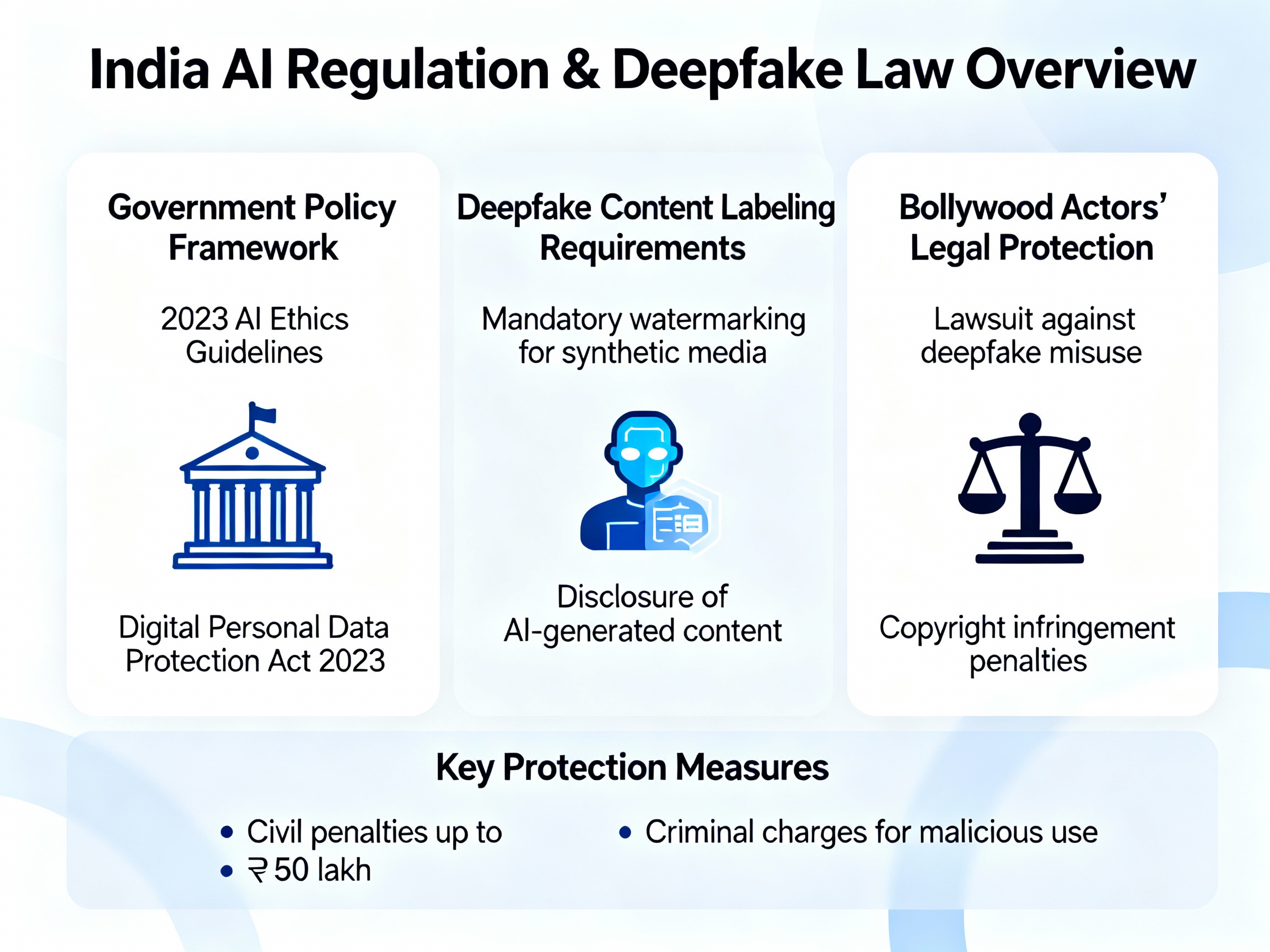

India's AI Content Regulation Framework: A Global Template?

Could India's approach to AI content regulation become a blueprint for the rest of the world? The nation is actively exploring a framework that encompasses AI content labeling, robust deepfake detection mechanisms, and clear platform accountability, reflecting a proactive stance towards managing AI's impact on society.

Mandatory Labeling and Platform Responsibility

At the heart of India's proposed framework is the mandatory labeling of AI-generated content, particularly on social media platforms. Think of it as a digital "ingredients list" for content that tells users about the origin and nature of what they are consuming. This push for transparency aims to help users critically evaluate the information they encounter and to curb the spread of misinformation. Significant Social Media Intermediaries (SSMIs) will likely bear much of the responsibility for implementing these labeling systems. The proposal builds on the Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021, which are already in force. This mirrors the EU's approach, covered in the AI News section of our site, which likewise emphasizes platform responsibility in the age of AI.
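India's framework, as described here, sets policy goals rather than a technical format. As a purely hypothetical illustration of a machine-readable "ingredients list", the sketch below attaches a provenance label to a content record. It also checks whether a visible on-screen label is large enough. The field names, the `generator` tag, and the `min_area_fraction` threshold are all assumptions for illustration. They are not taken from India's rules or from any platform's API.

```python
from dataclasses import dataclass, field


@dataclass
class ContentRecord:
    """Hypothetical metadata a platform might keep for an uploaded image or video."""
    content_id: str
    width_px: int
    height_px: int
    synthetic: bool = False  # declared or detected as AI-generated
    labels: dict[str, str] = field(default_factory=dict)


def apply_ai_label(record: ContentRecord, generator: str) -> ContentRecord:
    """Mark a record as synthetically generated and note the declared tool."""
    record.synthetic = True
    record.labels["ai_generated"] = "true"
    record.labels["generator"] = generator
    return record


def visible_label_ok(record: ContentRecord, label_w: int, label_h: int,
                     min_area_fraction: float = 0.10) -> bool:
    """Hypothetical rule: the on-screen label must cover at least min_area_fraction of the frame."""
    frame_area = record.width_px * record.height_px
    return (label_w * label_h) / frame_area >= min_area_fraction


if __name__ == "__main__":
    clip = apply_ai_label(ContentRecord("vid-001", 1920, 1080), generator="Runway")
    print(clip.labels, visible_label_ok(clip, 1920, 216), visible_label_ok(clip, 400, 100))
```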

Bollywood's Influence and Judicial Intervention

Several factors have accelerated regulatory pressure in India. One notable catalyst is the lawsuit filed by Bollywood actors, including Aishwarya Rai Bachchan and Abhishek Bachchan, against Google and YouTube. The suit alleges that AI was misused to create deepfakes that infringe their "personality rights". Judicial intervention further underscores the urgency of addressing AI misuse, with courts stepping in to protect individuals from AI-driven exploitation.

Defining and Combating Deepfakes

India's regulatory efforts also focus on defining 'synthetically generated information' to effectively address the proliferation of deepfakes. This involves establishing clear guidelines for identifying and flagging manipulated content. Consider the challenge of discerning a real video from one created using Runway, an AI tool capable of video editing and generation. The Ministry of Electronics and Information Technology (MeitY) is playing a crucial role in shaping these definitions and strategies.

The Ethics of AI-Generated Content

The larger question revolves around the ethics of AI-generated content: How do we balance freedom of expression with the need to protect individuals and society from AI-driven deception?

India's approach signals a comprehensive regulatory strategy that seeks to mitigate risks while fostering responsible innovation. As nations grapple with AI's transformative power, India's framework may serve as a valuable case study, informing global conversations on AI governance and ethical considerations. This is further explored in the AI in Practice section of our learning center.

Market Volatility and AI Valuations: Investor Skepticism Grows

Even as some Asian markets rally on renewed AI enthusiasm, a closer look reveals growing investor skepticism about AI valuations and the returns these companies are actually generating. It's a story of contrasts: exuberance on the surface, mounting unease beneath. Indices such as South Korea's KOSPI and Japan's Nikkei 225 can climb on AI optimism even while fundamental concerns persist.

Nvidia's Ascent and Valuation Tensions

Nvidia's stock surge has been a headline grabber, but it also masks underlying tensions about whether its valuation is truly justified. While the company undoubtedly leads in AI chip technology, some analysts question if current investment levels are sustainable, or if they fully align with demonstrated profitability. The staggering demand for data centers, driven by the voracious appetite of AI models, has led to massive capital expenditures. But the question remains: is the return on investment (ROI) truly justifying this unprecedented spending spree? Or are we inflating an AI earnings bubble destined to burst?
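The ROI question reduces to simple arithmetic once you commit to assumptions. The sketch below computes an undiscounted return on a capital outlay and its payback period. The figures in the usage lines are hypothetical: a $10B build-out, a four-year useful life for the hardware, and two levels of incremental annual profit. They were chosen only to show how sensitive the answer is to the assumed profit. A fuller analysis would discount future cash flows.

```python
def simple_roi(capex: float, annual_profit: float, years: int) -> float:
    """Undiscounted ROI over a fixed horizon: (total incremental profit - capex) / capex."""
    return (annual_profit * years - capex) / capex


def payback_years(capex: float, annual_profit: float) -> float:
    """Years of constant incremental profit needed to recover the outlay."""
    if annual_profit <= 0:
        return float("inf")
    return capex / annual_profit


if __name__ == "__main__":
    capex, useful_life = 10e9, 4  # hypothetical build-out and hardware lifetime (years)
    for profit in (2e9, 4e9):
        print(f"profit ${profit / 1e9:.0f}B/yr: ROI {simple_roi(capex, profit, useful_life):+.0%}, "
              f"payback {payback_years(capex, profit):.1f} years")
```

Under these assumptions, $2B a year of incremental profit never recovers the investment before the hardware ages out (ROI of -20%). Doubling that to $4B a year turns it into a 60% return. The valuation debate is largely a debate about that profit figure.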

Short Bets and Market Correction Signals

Adding fuel to the fire, short bets against companies like Palantir and Nvidia are emerging as potential indicators of a looming market correction. Michael Burry, known for his prescient bets against the U.S. housing market ahead of the 2008 financial crisis, has reportedly taken a bearish position against Nvidia. Such moves are not definitive, but they send a strong signal that some seasoned investors believe these companies are overvalued and ripe for a downturn. Nor is the skepticism confined to a few prominent investors: volatility in broader indices such as the Nasdaq and S&P 500 reflects the same uncertainty.

Palantir's Q3 Revenue: A Case Study

Consider Palantir's Q3 revenue. Even when the company reports growth, the market's reaction offers crucial insight into investor sentiment. Are investors truly convinced by the numbers, or are they anticipating slower growth or increased competition? Enterprise AI adoption rates are another critical metric to watch. If companies are not rapidly integrating AI solutions into their core operations, the projected growth rates of AI vendors could be severely affected. Together, these factors paint a more complex and cautious picture of the AI investment landscape and underline the need for rigorous analysis beyond the hype. Staying informed through resources like our AI News section can help you navigate these turbulent times.

The Future of AI: Efficiency, Specialization, and Regulation

The AI landscape is poised for a dramatic transformation, moving away from the era of unbridled scaling and towards a future defined by efficiency, specialization, and responsible regulation. We are at a critical inflection point, where the focus is shifting from simply making AI bigger to making it smarter and more sustainable.

Efficiency as the New Frontier

For years, the prevailing strategy in AI development has been to scale up models, throwing ever-increasing amounts of data and compute power at the problem. That approach is now hitting diminishing returns. The next competitive advantage will lie in intelligent reasoning systems that achieve more with less, through advances in algorithms, model architectures, and hardware optimization. Think of it as moving from a gas-guzzling SUV to a sleek, energy-efficient electric car: both get you from point A to point B, but one does it far more sustainably. Innovations such as test-time compute and Samsung's Tiny Recursive Model are emerging as potential efficiency multipliers.

The Rise of Specialized AI

General-purpose AI models like ChatGPT, a versatile language model that can assist with tasks from writing to coding, have captured the public's imagination. However, the future belongs to specialized AI: domain-specific solutions tailored to real-world problems in industries ranging from healthcare to finance. These specialized models can be more accurate, efficient, and cost-effective than their general-purpose counterparts. Consider AI tools built for medical diagnosis, financial forecasting, or logistics optimization. These are not general-purpose tools pointed at a specific problem; they are designed and trained specifically to solve it.

Regulation and Accountability

As AI becomes more deeply integrated into our lives, clear ethical guidelines and regulatory frameworks are becoming increasingly urgent. Emerging markets such as India are proactively defining AI accountability standards, recognizing that responsible innovation is essential for building trust and mitigating potential risks. In Europe, the EU AI Act sets a precedent for comprehensive AI regulation that is influencing global standards. It aims to ensure that AI systems are safe, transparent, and respectful of fundamental rights. Together, these efforts mark a shift toward a more equitable and trustworthy AI ecosystem, a topic we cover regularly in AI News.
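The EU AI Act is organized around risk tiers. Practices posing unacceptable risk are prohibited, and high-risk systems face strict obligations. Limited-risk systems such as chatbots carry transparency duties, while minimal-risk systems are largely left alone. The sketch below encodes that tiering as a lookup over a few commonly cited example use cases. It is a simplification for illustration only. Real classification depends on the Act's annexes and detailed definitions, and the use-case keys are hypothetical names.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "prohibited"
    HIGH = "strict obligations (e.g. risk management, documentation, human oversight)"
    LIMITED = "transparency obligations (e.g. tell users they are interacting with an AI)"
    MINIMAL = "no specific obligations"


# Simplified, illustrative mapping of example use cases to tiers (keys are hypothetical).
EXAMPLE_USE_CASES: dict[str, RiskTier] = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "cv_screening_for_hiring": RiskTier.HIGH,
    "customer_service_chatbot": RiskTier.LIMITED,
    "email_spam_filter": RiskTier.MINIMAL,
}


def obligations_for(use_case: str) -> str:
    """Return the tier and a one-line summary of obligations, or flag an unknown case."""
    tier = EXAMPLE_USE_CASES.get(use_case)
    if tier is None:
        return "unknown: needs case-by-case assessment"
    return f"{tier.name.lower()}: {tier.value}"


if __name__ == "__main__":
    for case in EXAMPLE_USE_CASES:
        print(f"{case:<26} -> {obligations_for(case)}")
```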

AI in the Enterprise: From Experimentation to Operationalization

Many companies have experimented with AI, launching pilot projects and exploring potential use cases. The next step is to move beyond experimentation and embed AI in systematic, repeatable operational processes. This requires a strategic approach that identifies high-impact areas where AI can drive measurable business value. The transition demands robust data infrastructure, clear governance policies, and a workforce equipped with the skills to manage and maintain AI systems. AI investment strategies must also adapt, shifting from enthusiasm-driven valuations to a more ROI-focused approach.

The AI skills gap remains a significant challenge, hindering widespread adoption. Preparing the workforce for the future requires a concerted effort to provide training and education in areas such as machine learning, data science, and AI ethics. Educational platforms are beginning to offer specialized AI learning resources.

In conclusion, the future of AI is not just about bigger models and more data. It's about smarter algorithms, specialized solutions, responsible regulation, and a strategic approach to enterprise adoption. By embracing these principles, we can unlock the full potential of AI to drive innovation, improve lives, and create a more sustainable future.

For more AI insights and tool reviews, visit our website https://best-ai-tools.org, and follow us on our social media channels!

Website: https://best-ai-tools.org

X (Twitter): https://x.com/bitautor36935

Instagram: https://www.instagram.com/bestaitoolsorg

Telegram: https://t.me/BestAIToolsCommunity

Medium: https://medium.com/@bitautor.de

Spotify: https://creators.spotify.com/pod/profile/bestaitools

Facebook: https://www.facebook.com/profile.php?id=61577063078524

YouTube: https://www.youtube.com/@BitAutor