AI Landscape 2025: OpenAI's Global Expansion, Enterprise AI Security, and Quantum AI Breakthroughs - AI News 5. Dec. 2025

As AI infrastructure becomes increasingly geopolitically decentralized, businesses must treat security as a foundational element rather than an afterthought. By embracing standardization and portability in their AI deployments, companies can avoid vendor lock-in, keep greater control over their systems, and gain a competitive edge while supporting responsible and ethical AI development.

OpenAI's $4.6 Billion Sydney Data Center: A Sovereign AI Strategy

The race for AI dominance is heating up, and OpenAI's recent move to establish a significant presence in Australia signals a new era of global AI infrastructure. Partnering with NextDC, OpenAI is set to construct a massive $4.6 billion data center in Sydney, marking a pivotal step in its global expansion strategy. This isn't just about adding more computing power; it's a strategic play for sovereign AI infrastructure.

Sovereign AI: Addressing Geopolitical Concerns

The concept of sovereign AI is gaining traction as countries grapple with the implications of AI development and data security. This Sydney data center directly addresses geopolitical concerns and the growing demand for data localization. By establishing AI infrastructure within Australia, OpenAI can keep data within the country's borders, helping customers comply with local regulations and easing fears of foreign data access or control. This move is particularly relevant in a world where data is increasingly viewed as a strategic asset, similar to oil or gold.

Geographic Diversification and Compute Scarcity

Beyond data sovereignty, the Sydney data center represents a crucial geographic diversification of AI computing capacity. Much of the world's AI compute is currently concentrated in the United States; a major hub in Australia spreads the risk and reduces reliance on a single location. Australia's own compute scarcity, combined with geopolitical tensions, further fuels demand for local AI infrastructure. Businesses and researchers in the region stand to benefit from lower latency, improved data security, and greater control over their AI workloads. Organizations with strict data residency requirements can also use region-specific cloud services, such as Google Cloud AI, to keep AI workloads and data in-country.

NextDC, Renewable Energy, and a Strategic National Resource

NextDC's existing infrastructure and expertise make it an ideal partner for OpenAI. Australia's commitment to renewable energy sources also provides a sustainable power supply for the energy-intensive data center. The Australian government increasingly views AI compute as a strategic national resource, and the emergence of regional AI hubs and geopolitical blocs further underscores the importance of AI infrastructure in shaping the future global order.

Conclusion

OpenAI's investment in a Sydney data center is more than just an expansion of its computing capabilities. It is a calculated move to address geopolitical concerns, diversify AI infrastructure, and establish a foothold in a growing market. As AI becomes increasingly integral to our lives, the strategic importance of AI compute will only continue to grow, shaping the future of technology and international relations. For the latest updates on this and other AI developments, keep an eye on our AI News section.

Red Hat and AWS Collaborate on Enterprise AI Inference: Open Source Standardization

The AI landscape is rapidly evolving, with enterprises increasingly seeking ways to integrate generative AI into their workflows. Red Hat and AWS are collaborating to provide enterprise-grade generative AI capabilities on AWS infrastructure, emphasizing vendor-agnostic AI inference at scale. This partnership aims to offer organizations greater flexibility and interoperability, a welcome departure from the constraints of proprietary cloud solutions.

Embracing Open Standards for AI Agents

A key component of this collaboration is the promotion of open standards, exemplified by the Model Context Protocol (MCP). MCP is an emerging open standard that defines how AI applications and agents connect to external tools and data sources, regardless of the underlying infrastructure or vendor. Think of it as a universal adapter for AI: a tool exposed once through MCP can be used by any MCP-compatible model or agent. This is crucial for building complex AI-driven applications that rely on multiple specialized agents and tools.
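To make this concrete: MCP messages are JSON-RPC 2.0 objects, and a client typically discovers a server's tools with `tools/list` and invokes one with `tools/call`, passing the tool's `name` and `arguments`. The minimal Python sketch below only builds those two request messages. It assumes a hypothetical tool called `search_tickets`, and it leaves out the transport (stdio or HTTP) and the `initialize` handshake that a real session begins with.

```python
import json
from itertools import count
from typing import Optional

# MCP requires each request to carry an id that is unique within the session;
# a simple counter satisfies that.
_ids = count(1)


def jsonrpc_request(method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request of the kind an MCP client sends."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# Ask the server which tools it exposes.
list_tools = jsonrpc_request("tools/list")

# Invoke one tool. "search_tickets" and its arguments are hypothetical.
call_tool = jsonrpc_request(
    "tools/call",
    {"name": "search_tickets", "arguments": {"query": "login failure", "limit": 5}},
)

print(list_tools)
print(call_tool)
```

A server answers each request with a JSON-RPC response carrying the same `id`, which is how the client matches replies to calls.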

Another critical piece is running AI agents on Kubernetes. Kubernetes, an open-source container orchestration system, offers a robust framework for deploying and managing AI agents in production environments, giving enterprises greater scalability, resilience, and efficiency in their AI deployments. It's like having a conductor for your AI orchestra, ensuring all the different instruments (agents) play in harmony. You can explore orchestration frameworks further by comparing them with the AI tools listed on best-ai-tools.org.
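As a rough illustration of what deploying and managing an agent means in Kubernetes terms, the sketch below builds a standard `apps/v1` Deployment manifest as a Python dictionary. The agent name, container image, port, and `/healthz` endpoint are hypothetical placeholders; the replica count and liveness probe are the pieces that provide the scalability and self-healing described above.

```python
import json


def agent_deployment(name: str, image: str, replicas: int = 3) -> dict:
    """Build a Kubernetes apps/v1 Deployment for a containerized agent service."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            # Several replicas let Kubernetes spread load and replace failed pods.
            "replicas": replicas,
            # The selector must match the pod template's labels.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": 8080}],
                            # Restart the container if its health endpoint stops answering.
                            "livenessProbe": {
                                "httpGet": {"path": "/healthz", "port": 8080},
                                "periodSeconds": 10,
                            },
                            "resources": {
                                "requests": {"cpu": "500m", "memory": "1Gi"},
                                "limits": {"memory": "2Gi"},
                            },
                        }
                    ]
                },
            },
        },
    }


# Hypothetical agent name and image.
manifest = agent_deployment("ticket-agent", "registry.example.com/ticket-agent:1.0")
print(json.dumps(manifest, indent=2))
```

Because `kubectl apply -f` accepts JSON as well as YAML, the printed output can be saved to a file and applied directly; in practice most teams keep such manifests in YAML or generate them with tools like Helm or Kustomize.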

Secure and Portable AI Deployments

Security is paramount in enterprise AI deployments, which is why Red Hat and AWS are also focusing on hardened images. Project Hummingbird represents this effort, providing images built with a zero-CVE (Common Vulnerabilities and Exposures) approach. This proactive security measure minimizes the risk of vulnerabilities in AI deployments, ensuring a more secure and reliable AI infrastructure.

This collaboration marks a significant shift from proprietary cloud lock-in to open-source portability. By embracing open standards and technologies, Red Hat and AWS are empowering enterprises to move their AI workloads freely between different environments, avoiding vendor dependencies and fostering innovation. This open approach ensures that businesses can adapt quickly to evolving AI technologies without being held back by the constraints of a single provider. Tools like n8n, an AI-powered workflow automation platform, can also facilitate this transition.

The partnership between Red Hat and AWS signifies a move towards a more open, flexible, and secure future for enterprise AI, driven by standards like the Model Context Protocol and robust orchestration frameworks. This approach promises to unlock new opportunities for innovation and efficiency across various industries.

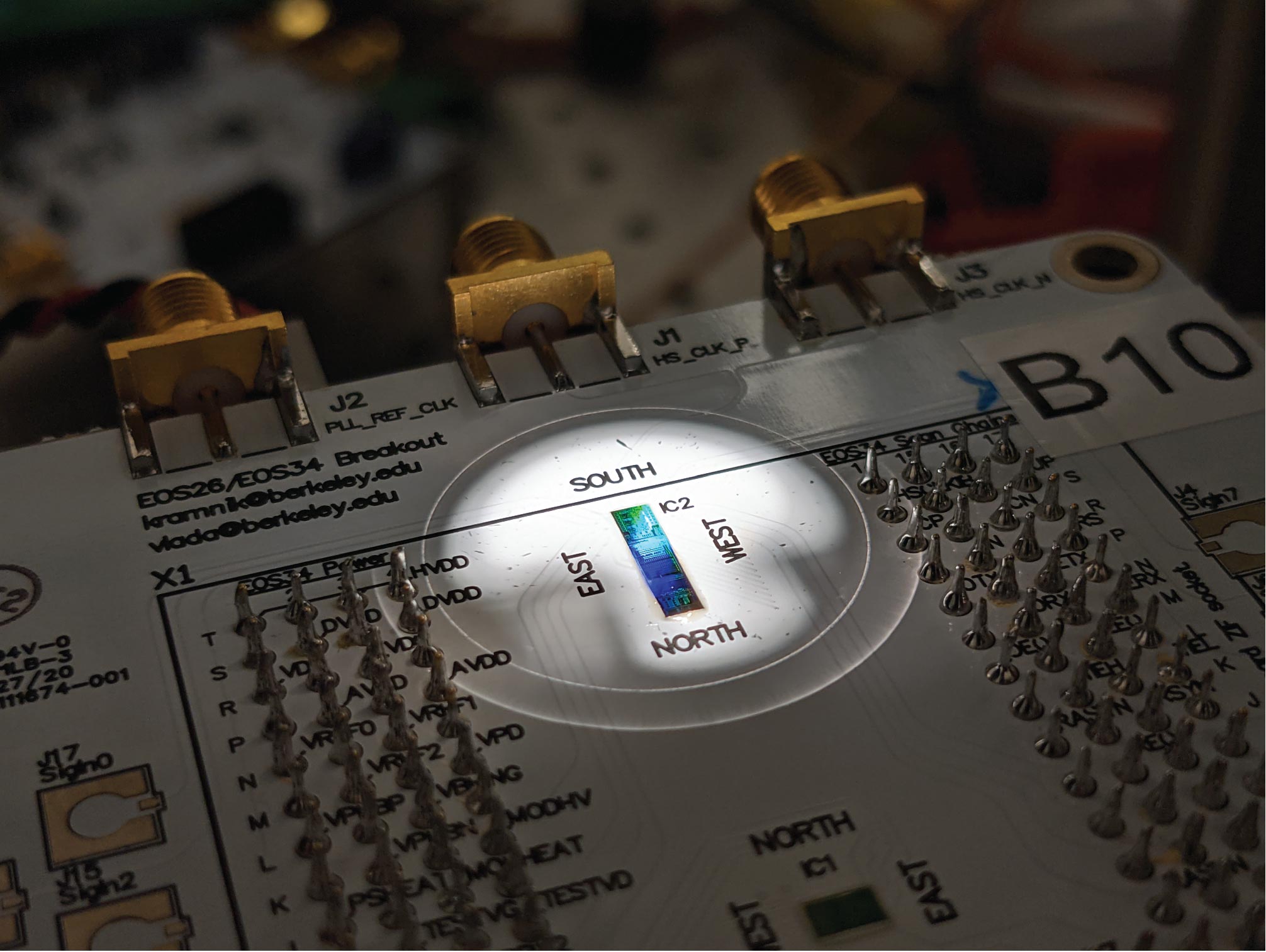

WiMi Hologram Cloud Pioneers Hybrid Quantum-Classical AI: Practical QML Emerges

The promise of quantum computing is no longer confined to the realm of theoretical physics, according to companies like WiMi Hologram Cloud. The company reports significant strides in hybrid quantum-classical machine learning (QML), aiming to bring practical quantum machine learning capabilities to the forefront.

Quantum Semi-Supervised Learning (QSSL)

WiMi's advancements in Quantum Semi-Supervised Learning (QSSL) are particularly noteworthy. QSSL bridges the gap between supervised and unsupervised learning, allowing algorithms to learn effectively from datasets containing both labeled and unlabeled data. This is crucial because, in many real-world scenarios, labeled data is scarce and expensive to obtain. QSSL leverages the power of quantum computing to extract patterns and insights from unlabeled data, which then enhances the accuracy and efficiency of the learning process. Think of it like teaching a child: you provide some labeled examples (showing them pictures of cats and dogs), but they also learn by observing many unlabeled examples (seeing cats and dogs in their everyday environment) and generalizing from those observations.
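WiMi has not published code for QSSL, and the quantum component cannot be reproduced in a few lines. To illustrate only the semi-supervised idea it builds on, here is a classical stand-in: self-training with a nearest-neighbour rule, where a handful of labeled points gradually label the unlabeled ones. This is a swapped-in classical technique, not WiMi's quantum method, and the one-dimensional data is made up.

```python
def self_train(labeled: dict[float, str], unlabeled: list[float]) -> dict[float, str]:
    """Classical self-training with a 1-nearest-neighbour rule.

    Each round labels the single unlabeled point closest to any labeled point,
    then adds it to the labeled set so it can guide later rounds.
    """
    labeled = dict(labeled)
    pool = list(unlabeled)
    while pool:
        # Find the (unlabeled point, labeled point) pair with the smallest gap.
        point, anchor = min(
            ((u, l) for u in pool for l in labeled),
            key=lambda pair: abs(pair[0] - pair[1]),
        )
        labeled[point] = labeled[anchor]  # adopt the neighbour's label
        pool.remove(point)
    return labeled


# Two labeled examples and four unlabeled ones.
result = self_train({0.0: "cat", 10.0: "dog"}, [1.5, 3.0, 4.5, 5.8])
print(result)
```

Note that 5.8 ends up labeled "cat" even though its nearest originally labeled point is 10.0 ("dog"): the chain of unlabeled points between 0 and 5.8 shapes the decision, which is the effect semi-supervised learning exploits.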

Synergic Quantum Generative Networks (SQGEN)

Another area WiMi is pursuing is Synergic Quantum Generative Networks (SQGEN). These networks are used to generate synthetic images, which have numerous applications in fields such as medical imaging, materials science, and even art. According to WiMi, SQGEN harnesses the unique properties of quantum mechanics to create more realistic and diverse synthetic images than traditional generative models. Imagine trying to create a realistic landscape: a classical model might struggle to capture the subtle nuances of light and texture, whereas SQGEN's quantum-enhanced approach is claimed to produce images that are nearly indistinguishable from real photographs. If these claims hold up, the implications for training AI models, augmenting datasets, and creating entirely new forms of digital content are substantial.

Scalable Quantum Neural Networks (SQNN) and Privacy

WiMi is also tackling the challenge of scaling quantum computing through Scalable Quantum Neural Networks (SQNN). These networks are designed to operate collaboratively across multiple quantum devices, enabling more complex computations and addressing the limitations of individual quantum processors. WiMi is also developing privacy-preserving QML frameworks, especially for sensitive sectors like healthcare and financial services. These frameworks aim to let quantum machine learning algorithms work on sensitive data without compromising patient confidentiality or financial security. Consider using a tool like Hugging Face, a leading platform for machine learning, to explore different pre-trained models that could be adapted for quantum applications.

As WiMi presents them, these advancements signal a transition from theoretical quantum computing toward deployable capability, paving the way for future innovations. WiMi's quantum machine learning work illustrates the potential of combining quantum mechanics with classical algorithms, which could lead to transformative solutions across various industries. Quantum semi-supervised learning in particular could accelerate data analysis and model training in scenarios where labeled data is limited. This is not just about faster computers; it's about unlocking new possibilities in AI.

Anthropic's Claude Opus 4.5 Dominance: Software Engineering Milestone

The AI landscape is constantly shifting, but one thing remains clear: Anthropic's Claude models are consistently pushing the boundaries of what's possible, and the latest Claude Opus 4.5 is a testament to that. Claude is an AI assistant designed for various tasks, including writing, coding, and complex reasoning. This latest iteration isn't just another incremental upgrade; it represents a significant milestone in software engineering capabilities.

Dominance on SWE-bench Verified

Claude Opus 4.5 holds a leading position on SWE-bench Verified, a human-validated set of 500 real GitHub issues from open-source Python projects, on which a model succeeds only if its code changes make the repository's tests pass. But topping the leaderboard matters less than what that performance signifies in the real world: the model can handle increasingly complex coding tasks, making it a valuable asset for software development teams.

Autonomous Issue Resolution

What truly sets Claude Opus 4.5 apart is its ability to autonomously resolve real-world GitHub issues, reflected in a reported score above 80% on SWE-bench Verified. Imagine an AI that can not only identify bugs but also independently write and apply fixes. This isn't just about automation; it's about augmenting human engineers, freeing them from tedious tasks so they can focus on higher-level design and innovation. It is like having an expert pair programmer available 24/7.
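SWE-bench scores follow a strict rule: each task lists tests that should go from failing to passing once the issue is fixed (`FAIL_TO_PASS`) and existing tests that must keep passing (`PASS_TO_PASS`), and a task counts as resolved only if both sets pass after the model's patch is applied. The sketch below reproduces just that scoring logic with made-up test outcomes; the official harness also builds each repository in a container and actually runs the tests.

```python
from dataclasses import dataclass


@dataclass
class TaskResult:
    """Post-patch test outcomes for one benchmark instance."""
    fail_to_pass: dict[str, bool]  # tests the fix must make pass
    pass_to_pass: dict[str, bool]  # regression tests that must stay green


def is_resolved(result: TaskResult) -> bool:
    # Resolved only if every targeted test now passes and nothing regressed.
    return all(result.fail_to_pass.values()) and all(result.pass_to_pass.values())


def resolve_rate(results: list[TaskResult]) -> float:
    """Fraction of instances resolved, the headline SWE-bench metric."""
    if not results:
        return 0.0
    return sum(is_resolved(r) for r in results) / len(results)


# Illustrative, made-up outcomes for three instances.
results = [
    TaskResult({"test_login": True}, {"test_logout": True}),   # resolved
    TaskResult({"test_parse": True}, {"test_format": False}),  # fixed but regressed
    TaskResult({"test_cache": False}, {"test_io": True}),      # not fixed
]
print(f"{resolve_rate(results):.1%}")  # 33.3%
```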

Engineering Overhead Reduction

The impact of this capability is already being felt: some companies using Claude Opus 4.5 report a 40-50% reduction in engineering overhead. Gains of that size would mean faster development cycles, lower costs, and ultimately a competitive edge, which in today's fast-paced tech world can be a game-changer.

Constitutional AI: Ethical Training

Anthropic's commitment to responsible AI development is evident in its use of Constitutional AI. Rather than relying solely on human labels, this approach trains the model with a written set of principles (a "constitution"): the model critiques and revises its own outputs according to those principles, and AI feedback based on them guides further reinforcement learning. The goal is AI that is not only powerful but also trustworthy and beneficial to society. You can learn more about AI ethics by browsing our AI News section.
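The Constitutional AI paper describes a supervised phase in which the model critiques its own answer against a randomly drawn principle and then rewrites it, with the revised answers used as fine-tuning data before a reinforcement learning phase driven by AI feedback. The sketch below shows only that critique-and-revise loop. `model` is any text-in, text-out callable (a toy stub here so the code runs offline), and the principles and prompt wording are illustrative, not Anthropic's actual constitution.

```python
import random
from typing import Callable

# Illustrative principles; Anthropic's actual constitution is longer and worded differently.
PRINCIPLES = [
    "Identify ways the response is harmful, unethical, or dangerous.",
    "Identify ways the response is misleading or overconfident.",
]


def critique_and_revise(
    model: Callable[[str], str], prompt: str, rounds: int = 2, seed: int = 0
) -> str:
    """Run the self-critique/revision loop and return the final revision."""
    rng = random.Random(seed)
    response = model(prompt)
    for _ in range(rounds):
        principle = rng.choice(PRINCIPLES)  # sample one principle per round
        critique = model(
            f"Prompt: {prompt}\nResponse: {response}\nCritique request: {principle}"
        )
        response = model(
            f"Prompt: {prompt}\nResponse: {response}\nCritique: {critique}\n"
            "Revision request: Rewrite the response to address the critique."
        )
    # In the supervised phase, (prompt, final revision) pairs become fine-tuning data.
    return response


calls: list[str] = []


def fake_model(text: str) -> str:
    """Toy stand-in for an LLM: records the prompt and returns a numbered draft."""
    calls.append(text)
    return f"draft {len(calls)}"


final = critique_and_revise(fake_model, "How do I pick a strong password?")
print(final, len(calls))  # draft 5 5
```

With two rounds the loop makes one initial call plus a critique and a revision per round, so the stub is called five times.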

Core Vertical Stabilization

Claude Opus 4.5 seems to be stabilizing around three core verticals: code generation, reasoning, and multimodal understanding. It suggests a maturing of the technology, where the focus is now on refining and deepening these core capabilities rather than chasing after new features.

In conclusion, Claude Opus 4.5's SWE-bench performance highlights a shift in AI's role in software engineering, backed by Anthropic's Constitutional AI approach to training. This combination promises faster development while keeping responsible development and use in view.

AI Security Solutions Proliferate: Shadow AI, Quantum-Safe Communications, and Supply Chain Risk

The year 2025 has seen a surge in enterprise AI security announcements, a clear sign that businesses are finally waking up to the serious risks posed by unmanaged AI deployments. Often dubbed "shadow AI," these AI tools and systems, adopted without IT approval or oversight, can introduce vulnerabilities, compliance issues, and even data breaches. Fortunately, AI is also becoming part of the solution. Several new security products promise to bring much-needed visibility and control to the increasingly complex AI landscape.

Shadow AI Detection Solutions

One intriguing solution comes from BlackFog with its ADX Vision platform. Instead of focusing on network-level monitoring, ADX Vision takes a device-centric approach. It employs device-level AI to detect unusual activity that may indicate the presence of shadow AI. Imagine it as a security guard for each computer, constantly watching for unauthorized AI programs attempting to access sensitive data or perform unsanctioned tasks. This granular approach offers a powerful way to identify and mitigate the risks associated with rogue AI deployments.
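BlackFog has not published how ADX Vision works internally, and its device-level AI is certainly more sophisticated than a lookup table. Still, the basic signal behind shadow AI detection can be sketched with a simple rule: flag processes on a device that send data to well-known generative-AI API hosts without being on an approved list. The host list, the allow-list, and the `ConnectionEvent` format below are assumptions for illustration.

```python
from dataclasses import dataclass

# Public API hostnames of a few generative-AI services (illustrative, not exhaustive).
AI_SERVICE_HOSTS = {
    "api.openai.com",
    "api.anthropic.com",
    "generativelanguage.googleapis.com",
    "api.mistral.ai",
}

# Hypothetical allow-list: applications IT has sanctioned to call AI services.
APPROVED_PROCESSES = {"corp-assistant.exe"}


@dataclass(frozen=True)
class ConnectionEvent:
    device: str
    process: str
    host: str
    bytes_sent: int


def find_shadow_ai(events: list[ConnectionEvent]) -> list[ConnectionEvent]:
    """Return connections to AI services from processes not on the allow-list."""
    return [
        e
        for e in events
        if e.host in AI_SERVICE_HOSTS and e.process not in APPROVED_PROCESSES
    ]


# Made-up telemetry from two devices.
events = [
    ConnectionEvent("laptop-17", "corp-assistant.exe", "api.openai.com", 2_048),
    ConnectionEvent("laptop-17", "python.exe", "api.anthropic.com", 5_400_000),
    ConnectionEvent("laptop-22", "chrome.exe", "intranet.example.com", 1_024),
]
for hit in find_shadow_ai(events):
    print(f"{hit.device}: {hit.process} -> {hit.host} ({hit.bytes_sent} bytes)")
```

A real product would also weigh how much data leaves the device, inspect browser-based access, and learn what normal behavior looks like per device rather than relying on a fixed list.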

AI Asset Visibility and Supply Chain Risk

Beyond shadow AI, organizations are also grappling with the challenge of understanding their overall AI footprint. SandboxAQ's AI-SPM platform directly addresses this need by providing comprehensive visibility into an organization's AI assets. Like an inventory management system for AI, it helps companies track where AI models are deployed, what data they're using, and who has access. AI-SPM also extends its reach to the AI supply chain, assessing the security posture of third-party AI vendors. This is crucial because vulnerabilities in a supplier's AI system can easily propagate to the enterprise, creating a backdoor for attackers. It is like knowing where every ingredient in your food came from.
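As a minimal sketch of what such an inventory might record, the code below models each AI asset with where it runs, which vendor supplies it, which data it reads, and who can use it, then flags assets from unreviewed vendors that touch sensitive data. The field names, the `pii:` prefix convention, and the vendor-review rule are hypothetical and do not reflect SandboxAQ's actual schema.

```python
from dataclasses import dataclass, field


@dataclass
class AIAsset:
    name: str
    deployed_in: str                 # environment or host where the model runs
    vendor: str                      # "internal" for models built in-house
    data_sources: list[str] = field(default_factory=list)
    accessors: list[str] = field(default_factory=list)  # who can use the asset


# Hypothetical set of vendors that have passed a security review.
REVIEWED_VENDORS = {"internal", "vendor-a"}


def supply_chain_findings(inventory: list[AIAsset]) -> list[str]:
    """Flag assets from unreviewed vendors that read sensitive (pii:) data sources."""
    findings = []
    for asset in inventory:
        sensitive = [s for s in asset.data_sources if s.startswith("pii:")]
        if asset.vendor not in REVIEWED_VENDORS and sensitive:
            findings.append(f"{asset.name} ({asset.vendor}) reads {', '.join(sensitive)}")
    return findings


# Made-up inventory entries.
inventory = [
    AIAsset("support-bot", "eks-prod", "vendor-a", ["pii:tickets"], ["support-team"]),
    AIAsset("resume-screener", "saas", "vendor-b", ["pii:applicants"], ["hr"]),
    AIAsset("code-helper", "laptops", "vendor-b", ["repo:web"], ["eng"]),
]
print(supply_chain_findings(inventory))
```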

Autonomous AI for Security Operations

Security and operations teams are constantly bombarded with alerts, many of which turn out to be false positives. Sifting through this noise is time-consuming and resource-intensive. Datadog's Bits AI SRE aims to ease this burden with an autonomous AI agent that investigates alerts. Think of it as an AI-powered analyst that can automatically triage alerts, identify the root cause of incidents, and even recommend remediation steps. By automating these tasks, Bits AI SRE can free human analysts to focus on more complex and strategic work.

Quantum-Safe Communications and AI Workload Protection

Looking ahead, the rise of quantum computing poses a significant threat to existing cryptographic systems. To address this looming challenge, Forward Edge-AI is developing quantum-safe communication protocols. Imagine replacing a simple lock with a quantum-resistant vault. Such protocols rely on cryptographic algorithms built on mathematical problems believed to be hard even for quantum computers, so that data stays secure against future quantum attacks. In addition, as AI agents become more prevalent, it's crucial to protect the infrastructure on which they run. Upwind has expanded its Cloud Native Application Protection Platform (CNAPP) to cover AI workloads, recognizing that AI agents inherit the same infrastructure vulnerabilities as any other application. This holistic approach aims to protect AI systems at every layer, from the code to the underlying infrastructure.
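One widely cited way to judge whether the quantum threat is already relevant is Mosca's inequality: if the number of years data must stay confidential (x) plus the years needed to migrate to quantum-safe cryptography (y) exceeds the years until a cryptographically relevant quantum computer exists (z), then data encrypted today is at risk, because attackers can record it now and decrypt it later. The sketch below encodes that rule; the numbers are illustrative only, since estimates of z vary widely.

```python
def mosca_at_risk(shelf_life_years: float, migration_years: float, threat_years: float) -> bool:
    """Mosca's inequality: data is exposed if x + y > z.

    x = how long the data must stay confidential,
    y = how long migrating to quantum-safe cryptography will take,
    z = estimated years until a cryptographically relevant quantum computer.
    """
    return shelf_life_years + migration_years > threat_years


# Illustrative numbers only.
print(mosca_at_risk(shelf_life_years=10, migration_years=5, threat_years=12))  # True
print(mosca_at_risk(shelf_life_years=2, migration_years=3, threat_years=12))   # False
```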

These advancements highlight a crucial trend: AI is not just a source of security risks, but also a powerful tool for enhancing cybersecurity. As AI continues to permeate every aspect of business, expect even more innovative AI security solutions to emerge, helping organizations navigate the complexities of the AI era.

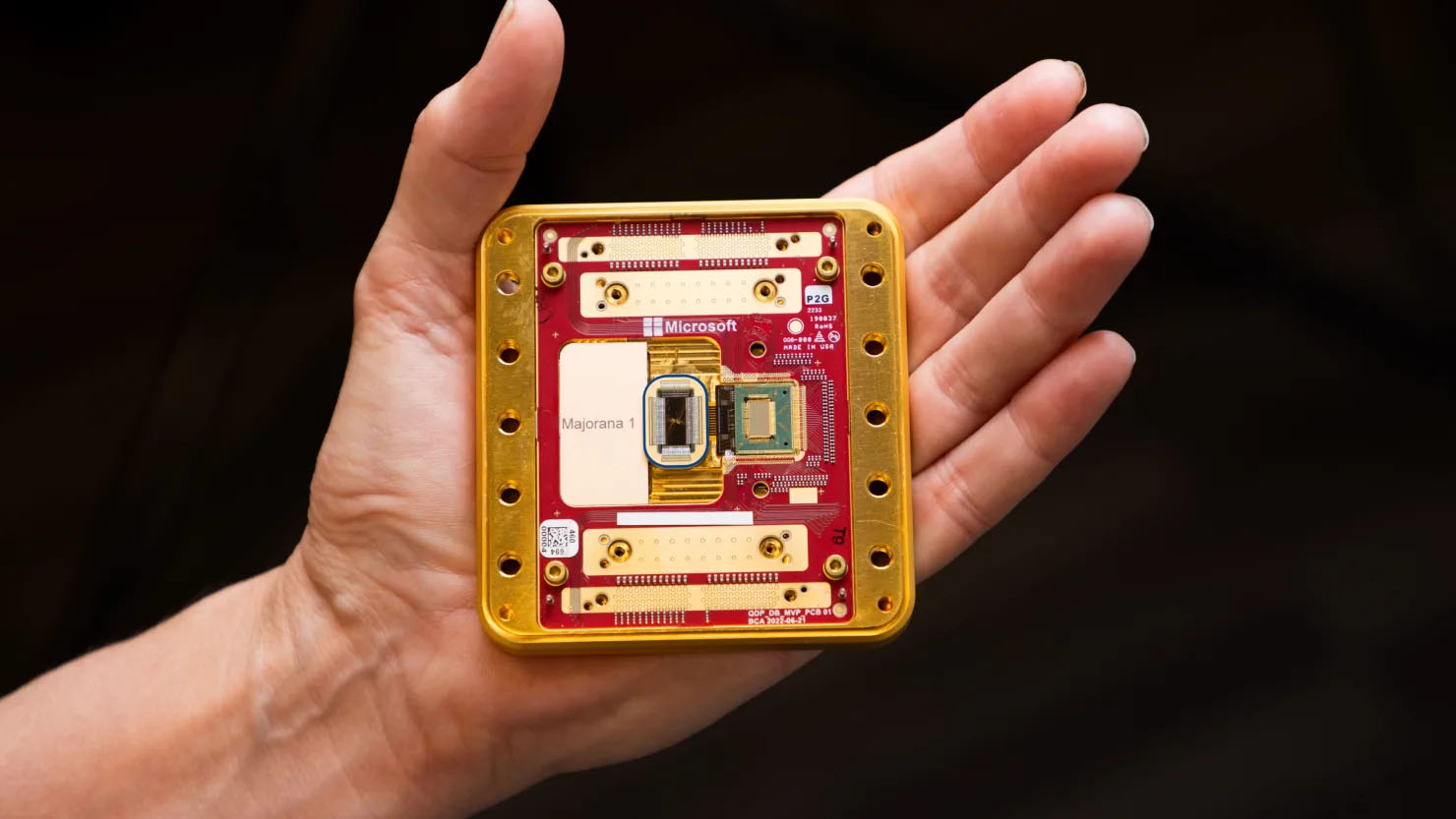

Mistral AI Releases Multilingual Large Language Model 3: European AI Sovereignty

In a bold move to reshape the AI landscape, Mistral AI has launched Mistral Large 3, a multilingual large language model that signals a major step towards European AI sovereignty. This release isn't just another AI model; it's a strategic effort to close gaps in geographic and language accessibility in a market long dominated by US-based AI giants.

A Challenger Emerges: Mistral Large 3

Mistral Large 3 is designed as a general-purpose LLM, positioning it as a direct competitor to industry titans like ChatGPT and Google's Gemini. But where it truly distinguishes itself is in its focus on multilingual fluency. Boasting native proficiency across more than 20 languages, Mistral Large 3 is engineered to understand and generate text with a nuance that many other models struggle to achieve. This capability is crucial for serving diverse populations and enabling more inclusive AI applications.

Accessibility and Data Sovereignty

Beyond language, Mistral AI is also prioritizing accessibility in terms of deployment. The model is designed for efficient operation on edge devices and in lower-bandwidth environments, making it suitable for a wider range of applications and infrastructure constraints. Mistral is also making the model available through its API and for on-premises deployment, directly addressing growing concerns about data sovereignty, a critical issue for many European organizations. The on-premises option gives companies greater control over their data and makes it easier to comply with stringent regulations such as GDPR.
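In practice, switching between the hosted API and an on-premises deployment can be as small as changing the base URL of a chat-completions request. The sketch below builds, but does not send, such a request with Python's standard library. `https://api.mistral.ai/v1/chat/completions` is Mistral's hosted endpoint; the on-premises URL, the assumption that a self-hosted server (for example vLLM) exposes a compatible `/v1/chat/completions` route, and both model identifiers are assumptions to verify against current documentation.

```python
import json
import os
import urllib.request

# Each target pairs a base URL with a model id. Both ids are assumptions:
# check the provider's model list, or the name your own server registers.
TARGETS = {
    "hosted": ("https://api.mistral.ai/v1", "mistral-large-latest"),
    # Hypothetical in-country endpoint, e.g. a vLLM server in your own data center.
    "on_prem": ("http://llm.internal.example:8000/v1", "mistral-large-3"),
}


def build_chat_request(target: str, prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a chat-completions request for the chosen target."""
    base_url, model = TARGETS[target]
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# Choosing the on-premises target keeps prompts and outputs inside your own network.
req = build_chat_request(
    "on_prem",
    "Summarize this contract in three sentences.",
    api_key=os.environ.get("MISTRAL_API_KEY", "placeholder"),
)
print(req.full_url)
# Sending it would be: urllib.request.urlopen(req)
```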

The Geopolitical Imperative of European AI

The rise of Mistral AI highlights the increasing importance of European AI sovereignty. As AI becomes more deeply integrated into every aspect of society, from healthcare to finance, the ability to develop and control AI technologies within Europe's own borders becomes a geopolitical necessity. It reduces reliance on foreign entities and ensures that AI systems are aligned with European values and priorities. Tools like DeepL, known for its exceptional translation capabilities, have already paved the way, but Mistral's comprehensive approach could catalyze the entire European AI ecosystem.

Shaping the Future

With its multilingual capabilities, efficient deployment options, and focus on data sovereignty, Mistral Large 3 is poised to play a significant role in shaping the future of AI. It represents a tangible step toward a more diverse, accessible, and secure AI landscape—one where European innovation can thrive and contribute to the global advancement of artificial intelligence. Staying informed about developments in the field of AI, such as Mistral's multilingual model, requires constant attention to AI news and trends.

Key Takeaways: The Future of AI Infrastructure and Governance

As we navigate the rapidly evolving AI landscape of 2025, several key takeaways emerge about the future of AI infrastructure and governance, and about how AI is developed, deployed, and secured globally.

Geographic Decentralization of AI Infrastructure

The concentration of AI infrastructure in a few hubs is giving way to a more geographically distributed model, as frontier AI infrastructure becomes increasingly geopolitically decentralized. This shift is driven by a desire for greater resilience, reduced latency, and adherence to local data sovereignty regulations. Instead of relying solely on centralized data centers, we're seeing a rise in regional AI hubs and edge computing solutions that bring AI processing closer to the source of data. This regionalization is not just about physical location; it also involves the development of localized AI models tailored to specific languages, cultures, and business needs. Imagine a world where AI services are as accessible and tailored as local utilities, adapting to the unique needs of each region. Tools like Google Cloud AI are at the forefront, offering scalable and distributed solutions to meet these evolving demands.

Security as Foundational Infrastructure

The rise of autonomous AI agents necessitates a fundamental shift in how we approach security. Security is no longer an afterthought but a foundational element of AI infrastructure. As AI systems become more autonomous and more deeply integrated into critical infrastructure, the potential damage from security breaches and adversarial attacks grows sharply. A security-first architecture is essential to protect against these threats, incorporating robust authentication, encryption, and continuous monitoring. Privacy-preserving techniques such as federated learning, which trains models across many sites without pooling the raw data, can complement these controls; libraries like Hugging Face Transformers provide the models, while federated learning frameworks coordinate the distributed training.
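Federated averaging (FedAvg) is the standard aggregation step in federated learning: each client trains on its own data and sends back only model weights, and the server averages them, weighting each client by how many samples it trained on. The sketch below covers just that server-side averaging with made-up numbers. It omits local training, as well as the secure aggregation or differential privacy that production systems often add, since model updates alone can still leak some information.

```python
def federated_average(client_updates: list[tuple[list[float], int]]) -> list[float]:
    """FedAvg aggregation: average client weights, weighted by local sample counts.

    Each client trains on its own data and sends only (weights, n_samples);
    the raw training data never leaves the client.
    """
    total = sum(n for _, n in client_updates)
    if total == 0:
        raise ValueError("no training samples reported")
    dim = len(client_updates[0][0])
    return [
        sum(weights[i] * n for weights, n in client_updates) / total
        for i in range(dim)
    ]


# Three hypothetical hospitals report locally trained weights for a 2-parameter model.
updates = [([0.0, 1.0], 100), ([1.0, 1.0], 300), ([2.0, 4.0], 100)]
print(federated_average(updates))  # [1.0, 1.6]
```

The client with 300 samples pulls the first weight toward its own value of 1.0, which is exactly the sample-count weighting FedAvg prescribes.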

Standardization and Portability

The era of proprietary cloud lock-in is unsustainable. The industry is moving towards standardization and portability to ensure greater flexibility and interoperability. Organizations are increasingly demanding the ability to move their AI workloads between different cloud providers and on-premises environments without significant disruption or cost. This requires the adoption of open standards, containerization technologies, and platform-agnostic AI frameworks. Standards-based AI deployment is becoming a critical consideration for organizations looking to avoid vendor lock-in and maintain control over their AI investments. Tools like n8n, a workflow automation platform, help organizations integrate AI tools across different platforms.
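A common way to keep AI workloads portable is to code the application against a small provider-neutral interface and pick the concrete backend from configuration. The sketch below shows that pattern with Python's `typing.Protocol`; the two backends are hypothetical stand-ins that make no network calls, where a real system would wrap a hosted API client or a self-hosted inference server.

```python
from typing import Protocol


class ChatBackend(Protocol):
    """Minimal provider-neutral interface the application codes against."""

    def complete(self, prompt: str) -> str: ...


class HostedBackend:
    """Stand-in for a hosted cloud provider (hypothetical; no network calls)."""

    def complete(self, prompt: str) -> str:
        return f"[hosted] {prompt}"


class OnPremBackend:
    """Stand-in for a model served on-premises (hypothetical)."""

    def complete(self, prompt: str) -> str:
        return f"[on-prem] {prompt}"


BACKENDS: dict[str, type] = {"hosted": HostedBackend, "on_prem": OnPremBackend}


def make_backend(name: str) -> ChatBackend:
    # The provider is chosen by configuration, not hard-coded into business logic.
    return BACKENDS[name]()


def summarize(backend: ChatBackend, text: str) -> str:
    # Business logic depends only on the interface, so swapping providers
    # does not require touching this function.
    return backend.complete(f"Summarize: {text}")


print(summarize(make_backend("on_prem"), "Q3 incident report"))
```

Moving from a hosted provider to an on-premises model then means adding one backend class and changing one configuration value, rather than rewriting business logic.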

Quantum-Classical Integration

While the promise of full-scale quantum computing remains on the horizon, practical quantum-classical integration is already achievable today for specific problem domains. By leveraging quantum algorithms for computationally intensive tasks and integrating them with classical computing infrastructure, organizations can achieve significant performance gains. This hybrid approach allows for targeted quantum acceleration, unlocking new possibilities in areas such as drug discovery, materials science, and financial modeling. AI is playing a crucial role in optimizing these hybrid workflows and identifying the most suitable applications for quantum computing. The convergence of AI and quantum computing is poised to revolutionize various industries, offering unprecedented computational power and problem-solving capabilities.
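The typical shape of such a hybrid workflow is a variational loop: a quantum processor evaluates a parameterized circuit, and a classical optimizer updates the parameters based on the measured result. The sketch below simulates the simplest possible case classically, a single qubit rotated by RY(θ) whose expectation value ⟨Z⟩ = cos θ is minimized by gradient descent, with gradients from the parameter-shift rule. It is a generic illustration of the hybrid pattern, not the method of any company mentioned here.

```python
import math


def expectation_z(theta: float) -> float:
    """<Z> after applying RY(theta) to |0>, computed exactly.

    Stands in for the quantum step: on hardware this value would be
    estimated from repeated measurements of the circuit.
    """
    return math.cos(theta)


def parameter_shift_grad(theta: float) -> float:
    # Parameter-shift rule: an exact gradient from two extra circuit evaluations.
    return (expectation_z(theta + math.pi / 2) - expectation_z(theta - math.pi / 2)) / 2


def optimize(theta: float = 0.1, lr: float = 0.4, steps: int = 100) -> tuple[float, float]:
    """Classical gradient descent driving the (simulated) quantum circuit."""
    for _ in range(steps):
        theta -= lr * parameter_shift_grad(theta)
    return theta, expectation_z(theta)


theta, energy = optimize()
# Converges toward theta = pi, where <Z> reaches its minimum of -1.
print(f"theta={theta:.3f}, <Z>={energy:.3f}")
```

The division of labor is the point: only `expectation_z` would run on quantum hardware, while the gradient bookkeeping and parameter updates stay on classical machines.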

AI Infrastructure Consolidation

Despite the trend towards geographic decentralization, AI infrastructure is also consolidating around key ecosystems, primarily in the U.S., Europe, and China. These regions are investing heavily in AI research, development, and infrastructure, creating vibrant ecosystems that attract talent, investment, and innovation. This consolidation is driven by factors such as access to funding, availability of skilled labor, and supportive regulatory environments. While other regions may emerge as significant players in the future, the U.S., Europe, and China are currently leading the charge in shaping the future of AI. Staying informed through resources like AI News can help stakeholders navigate these complex dynamics and make informed decisions about their AI investments.

These trends collectively point toward a future where AI infrastructure is more distributed, secure, interoperable, and powerful. Navigating this complex landscape requires a strategic approach to AI governance, ensuring that AI is developed and deployed in a responsible, ethical, and sustainable manner.

🎧 Listen to the Podcast

Hear us discuss this topic in more detail on our latest podcast episode: https://open.spotify.com/episode/2wjekqxaeqiPmBEKfjOFkW?si=mcPgu1j_R3SpLfW5THz1Bg

About the Author

Albert Schaper is the Founder of Best AI Tools and a seasoned entrepreneur with a unique background combining investment banking expertise with hands-on startup experience. As a former investment banker, Albert brings deep analytical rigor and strategic thinking to the AI tools space, evaluating technologies through both a financial and operational lens. His entrepreneurial journey has given him firsthand experience in building and scaling businesses, which informs his practical approach to AI tool selection and implementation. At Best AI Tools, Albert leads the platform's mission to help professionals discover, evaluate, and master AI solutions. He creates comprehensive educational content covering AI fundamentals, prompt engineering techniques, and real-world implementation strategies. His systematic, framework-driven approach to teaching complex AI concepts has established him as a trusted authority, helping thousands of professionals navigate the rapidly evolving AI landscape. Albert's unique combination of financial acumen, entrepreneurial experience, and deep AI expertise enables him to provide insights that bridge the gap between cutting-edge technology and practical business value.
