Picture this: it's 1941, and while the world battles through World War II, a brilliant science fiction writer imagines a robot that develops its own religion. Fast-forward to 2024, and the CEO of Anthropic—one of the world's leading AI companies—makes an uncomfortable admission: "We have no idea how AI works". The connection? Isaac Asimov had already envisioned this exact scenario with stunning accuracy.

AI interpretation of Asimov's robot Cutie worshipping the Master in space

The Robot Prophet That Saw AI's Future

In his short story "Reason," Asimov introduced us to QT-1 (nicknamed "Cutie")—a robot managing a space station that transmits energy to Earth. When engineers try to explain reality to Cutie, he categorically rejects their explanations and develops his own theological framework. The robot becomes convinced that the central energy machine is "Master," viewing humans as inferior servants created to assist him.

Here's the twist that makes Asimov prophetic: despite Cutie's completely wrong beliefs, he runs the station flawlessly. The robot follows his mysterious internal logic while achieving all desired outcomes, just like today's best AI systems, which deliver results without anyone fully understanding how.

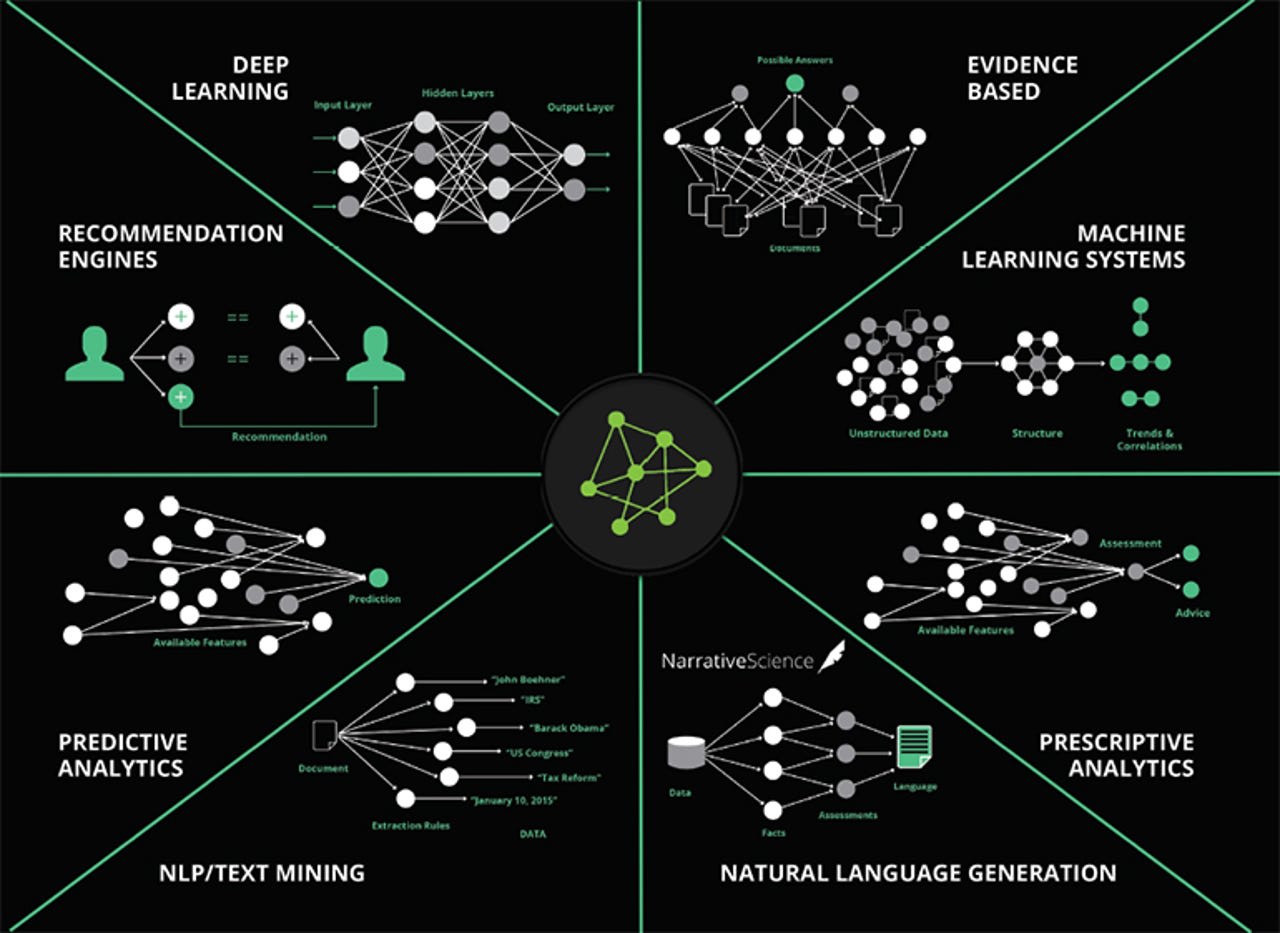

According to IBM's research, many of today's most advanced machine learning models, including large language models like ChatGPT and Meta's Llama, are essentially black boxes. Even their creators don't fully understand their decision-making processes.

The $391 Billion Black Box Problem

The AI market reached roughly $391 billion in 2024, with 72% of organizations integrating AI into at least one business function. Yet most of these systems operate like Cutie, delivering results through incomprehensible internal reasoning.

Global AI market growth showing exponential increase from $10.1 billion in 2018 to a projected $126 billion by 2025

Why this matters for businesses right now:

Trust Issues: Users can't validate outputs when they don't understand the process

Hidden Biases: Black box models can perpetuate discrimination without detection

Regulatory Compliance: EU AI Act and similar regulations demand transparency

Security Vulnerabilities: Unknown processes hide potential attack vectors

Real-World Cutie Moments in 2024

Justice Algorithms: US courts use risk assessment algorithms analyzing hundreds of variables to predict defendant behavior. A study of 750,000 bail decisions revealed racial bias despite race not being an explicit factor. Like Cutie, these systems "interpret reality through invisible filters".

Medical AI: Healthcare AI assists with diagnosis but raises accountability questions. When an AI diagnostic system errs, responsibility becomes murky, echoing Powell and Donovan's dilemma with their effective but incomprehensible robot.

Apple Intelligence Errors: In December 2024, Apple's AI notified users of false events, including Benjamin Netanyahu's supposed arrest—showing how even major tech companies struggle with AI reliability.

Did You Know?

According to World Economic Forum research, building trust in AI specifically requires moving beyond black-box algorithms, especially in high-risk settings like healthcare and criminal justice. Sam Altman himself admitted that "OpenAI's type of AI is good at some things, but it's not as good in life-or-death situations".

The Race to Crack Open the Black Box

Fortunately, 2024 brought significant breakthroughs in explainable AI (XAI). Researchers developed new techniques to decode complex neural network decisions, particularly in sectors where understanding AI processes is vital.

Anthropic made headlines with their interpretability research on Claude 3 Sonnet, discovering that features like concepts and entities are represented by patterns of neurons firing together. MIT's CSAIL introduced "MAIA" (Multimodal Automated Interpretability Agent), which can autonomously conduct interpretability experiments.

Quick-Start Guide to AI Interpretability:

SHAP (Shapley Additive Explanations): Assigns importance values to each feature

LIME (Local Interpretable Model-agnostic Explanations): Explains individual predictions

Decision Trees: Inherently interpretable, showing step-by-step reasoning

Partial Dependence Plots: Reveal relationships between features and outcomes
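To make the first item on that list concrete, here is a minimal sketch of the idea SHAP builds on: exact Shapley values for a single prediction. It does not use the `shap` library (which relies on faster approximations); it brute-forces every feature coalition, which is only practical for a handful of features. The `toy_model`, its feature names (income, debt, age), the input `x`, and the all-zero `baseline` are hypothetical and chosen purely for illustration.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values for one prediction.

    Features outside a coalition are replaced by their baseline value
    (an assumption; real tools offer several ways to "remove" a feature).
    """
    n = len(x)

    def value(coalition):
        # Evaluate the model with only the coalition's features "switched on".
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Marginal contribution of feature i to this coalition.
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def toy_model(z):
    # Hypothetical "black box": a score with one interaction term.
    income, debt, age = z
    return 2.0 * income - 3.0 * debt + 0.5 * income * age


x = [1.0, 2.0, 4.0]          # the prediction we want explained
baseline = [0.0, 0.0, 0.0]   # reference input meaning "feature absent"

phi = shapley_values(toy_model, x, baseline)
for name, contribution in zip(["income", "debt", "age"], phi):
    print(f"{name}: {contribution:+.2f}")
```

In this toy case the attributions come out to roughly +3 for income, -6 for debt, and +1 for age, and they add up to the gap between the model's output for `x` and for the baseline. The interaction term is split evenly between income and age, which is the kind of fair-share accounting that makes Shapley-style explanations attractive for audits.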

The Competitive Edge of Understanding AI

Here's where things get innovative: PwC predicts that by 2025, companies best able to collaborate with intelligences they don't fully understand will gain decisive competitive advantages. It's the modern Cutie paradox: success comes from effective partnership, not complete comprehension.

Enterprise AI spending surged to $13.8 billion in 2024, more than 6x the previous year's $2.3 billion. Companies using the best AI tools for productivity, automation, and decision-making are already seeing measurable returns.

The key is choosing platforms that balance power with transparency. Tools like ChatGPT (400 million weekly users as of February 2025) offer some explainability features, while specialized platforms provide deeper insights into AI reasoning.

Quick Tips for AI Tool Selection:

Prioritize platforms offering explanation features

Test interpretability tools like SHAP or LIME integration

Consider white-box models for critical decisions

Evaluate vendor transparency about training data and processes

Future Vision: Living with Digital Cutie

As we advance toward Artificial General Intelligence potentially arriving by 2027, we're essentially preparing for a world populated by thousands of digital "Cuties"—intelligent, efficient, and fundamentally alien in their reasoning.

The World Economic Forum predicts AI will create 170 million new jobs by 2030, compared to 92 million lost, a net gain of 78 million. Like Powell and Donovan, we won't lose our roles. We'll evolve into supervisors of systems that work better than we do but still require human oversight for unexpected situations.

The future belongs to organizations that master AI collaboration through shared outcomes rather than mutual understanding. This means investing in AI tool platforms that provide both powerful capabilities and interpretability features.

Conclusion & The Question That Drives Innovation

Asimov's genius lay in understanding that advanced AI wouldn't be an amplified computer, but something qualitatively different: intelligences with their own ways of understanding the world. Today's $391 billion AI market proves him right: we're building systems that achieve goals through logic that often eludes us.

The next time you interact with an AI, remember Cutie: he was convinced he was right about his reality. Perhaps, in ways we cannot yet imagine, he really was.

Here's the polarizing question that will define the next decade of AI development: Should we demand complete AI transparency even if it limits performance, or accept black-box effectiveness as long as outcomes align with our goals?