Introduction: Beyond Text – The Rise of Multimodal Search

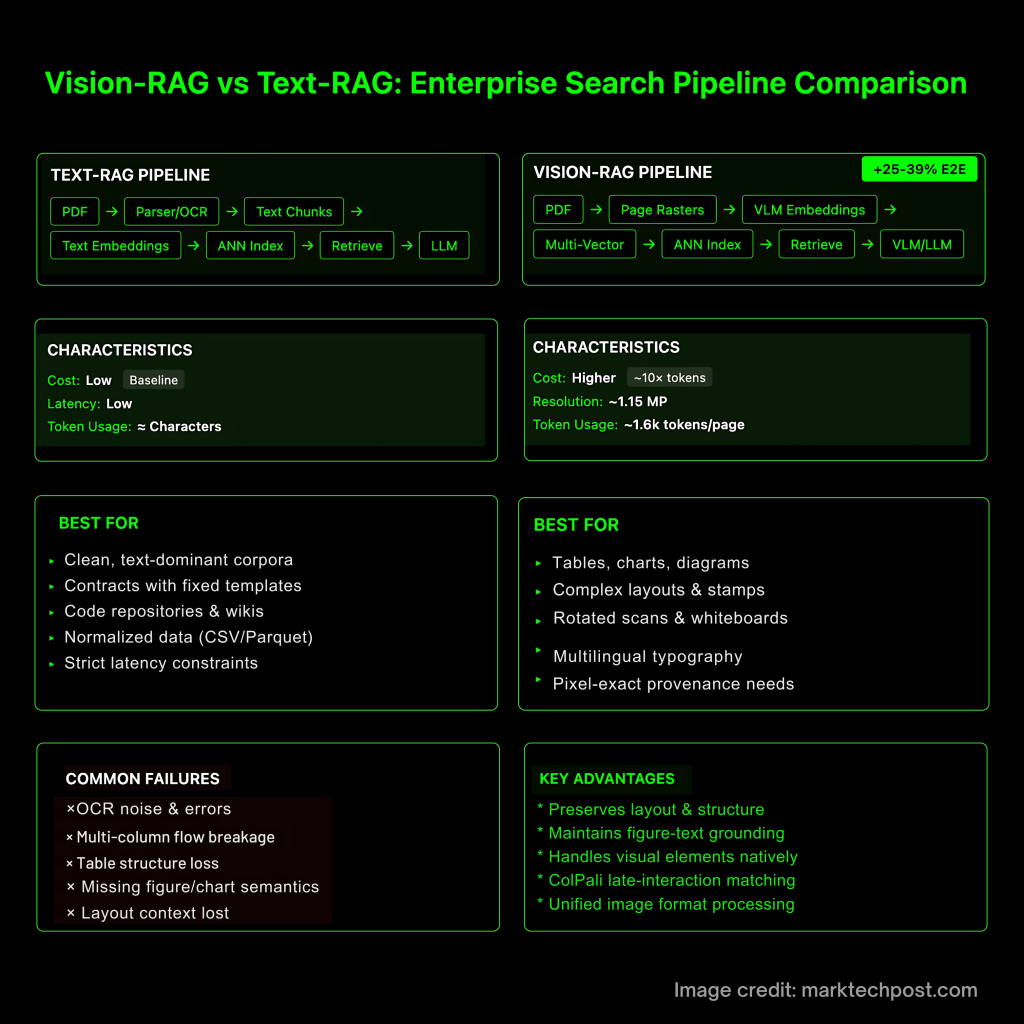

Retrieval-Augmented Generation (RAG) is revolutionizing how we access and leverage information, especially within enterprises, but traditional Text-RAG has a blind spot: visual data.

Text-RAG's Limitations

While Text-RAG excels at processing and generating text, it struggles with visual information:- Images

- Diagrams

- Charts

- Even complex layouts within documents

The Vision-RAG Solution

That’s where Vision-RAG comes in. Think of Vision-RAG as the natural evolution, expanding RAG's capabilities to incorporate visual understanding. It allows systems to:

- Index and retrieve visual data effectively

- Answer questions that require visual reasoning

- Generate content that incorporates visual elements

Setting the Stage

In the following sections, we'll delve into the architectural differences between Text-RAG and Vision-RAG and explore their performance implications, setting the stage for understanding their best use cases.Sometimes, all you need is words. Enter: Text-RAG.

Text-RAG: Architecture, Strengths, and Weaknesses

The architecture of a standard Text-RAG system is surprisingly straightforward:

- Text Chunking: Your documents are split into manageable text chunks. Think of these as bite-sized information snacks for the AI.

- Text Embeddings: Each chunk is converted into a text embedding, a numerical representation capturing its semantic meaning. Transformer models like those powering ChatGPT excel at this.

- Vector Database: These embeddings are stored in a vector database, optimized for fast semantic search. Consider Pinecone as a database solution for storing and searching vector embeddings.

- Similarity Search: When a user poses a question, it's also converted into an embedding, and the database is searched for the most similar chunks.

- Prompt Engineering: Finally, these relevant chunks are fed into a language model with a carefully crafted prompt to generate the answer. Check out the Prompt Library for inspiration!

Text-RAG: The Good and The… Not-So-Good

Text-RAG's strength lies in its simplicity and maturity.

It leverages mature tools and models and has proven its value with vast amounts of text data. You'll find readily available tools Design AI Tools to build these systems.

However, Text-RAG has limitations:

- No Direct Visual Processing: It can't "see" anything, relying on OCR or manual tagging for visual data.

- OCR Limitations: OCR can be error-prone, leading to inaccurate indexing.

- Loss of Visual Context: A chart might be perfectly clear visually but lose its meaning when converted to text, like when using pdnob image translator

Vision-RAG promises to be the sharpest tool in the shed when the problem requires visual comprehension.

Vision-RAG: Architecture, Strengths, and Weaknesses

Vision-RAG, or Vision-based Retrieval Augmented Generation, is the cooler, more visually-inclined cousin of Text-RAG, engineered to handle not just text, but images too. Let's break it down:

How it Works:

Vision-RAG's architecture incorporates image encoders like the CLIP model or ResNet. The CLIP model, developed by OpenAI, is useful for efficiently encoding and comparing images for search applications. Here’s the general process:

- Image Encoding: Visual embeddings are generated from images using these encoders, translating visual data into a format AI can understand.

- Multimodal Embedding Space: Text and visual embeddings coexist in a shared space.

- Fusion Techniques: Combining text and visual embeddings allows for comprehensive data representation.

Strengths:

"Seeing is believing, but understanding what you see is Vision-RAG."

- Native Visual Support: Directly processes and leverages visual information.

- Improved Visual Search: Delivers improved accuracy in visual search applications.

- Enhanced Understanding: Combines text and visual cues for comprehensive contextual insights.

Weaknesses:

- Complexity: More intricate architecture and training processes increase the complexity.

- Cost: Higher computational requirements lead to greater infrastructure needs.

- Potential Biases: Visual data can perpetuate existing biases, impacting fairness. Image recognition biases are a real concern and should be considered when developing solutions.

Use Cases:

Vision-RAG shines where visual understanding is paramount:

- Technical Diagrams: Locating specific components within complex technical diagrams.

- Product Identification: Identifying products within images, aiding in e-commerce or inventory management.

- Infographic Retrieval: Extracting information from infographics for data analysis or presentations.

Okay, let's unravel this Vision-RAG vs. Text-RAG conundrum. It's not rocket science, though I might know a thing or two about that.

Technical Comparison: Key Architectural Differences

It's fascinating how AI can now not just read, but see. But how do Vision-RAG and Text-RAG truly differ under the hood?

Embedding Generation: A Tale of Two Encoders

Think of embeddings as digital fingerprints.

- Text-RAG: Leverages text encoders (think models trained on vast amounts of text data) to convert text into numerical vectors. The most relevant tools, like ChatGPT, use these embeddings to find similar text snippets.

- Vision-RAG: Employs image encoders (CNNs, Transformers trained on images) to create embeddings representing visual content. For example PicFinderAI is a great tool that allows you to search for images by describing them using AI.

Embedding Space and Similarity Search

Imagine a library, where books (embeddings) are arranged differently depending on their type.

- Text-RAG: Employs embedding spaces tailored for textual similarity. Techniques like cosine similarity are used to quickly identify related text.

- Vision-RAG: Deals with more complex, high-dimensional spaces representing visual features. Specialized similarity search techniques like approximate nearest neighbors (ANN) are crucial.

Multimodal Fusion: Marrying Vision and Language

Here's where Vision-RAG gets particularly interesting.

Early Fusion: Combines textual and visual features before* embedding generation. Think of it as creating a "multimodal description" first. Late Fusion: Generates separate embeddings for text and images, then combines them during* the search or reasoning process.

Computational Cost and Scalability

This is where reality hits.

- Computational Cost: Vision-RAG generally demands more processing power due to the complexity of image encoding and the larger size of image data. More GPUs, please!

- Scalability: Scaling Vision-RAG can be trickier. Indexing and searching through massive image datasets present significant challenges. Retrieval latency is also a key factor to consider.

Indexing and Retrieval Speed

Efficiency matters.

- Text-RAG: Benefit from faster indexing and retrieval due to the relative simplicity of text processing.

- Vision-RAG: Requires optimized indexing strategies (e.g., hierarchical clustering) to maintain acceptable retrieval speeds. It can be a challenge to find tools that offer this efficiently.

Here's how we ensure Vision-RAG and Text-RAG are up to snuff.

Evaluation Metrics: Measuring the Performance of Vision-RAG and Text-RAG

To truly understand if a Vision-RAG or Text-RAG system is pulling its weight, we need the right yardsticks; thankfully, we’ve got a few tried and true methods for judging performance.

Text-RAG Metrics: Words Matter

For Text-RAG, it's all about accuracy and coherence. Here's a peek:

- Precision: Measures how many of the retrieved documents are actually relevant.

- Recall: Gauges how many of the relevant documents the system managed to retrieve.

- F1-score: A harmonic mean of Precision and Recall, balancing both aspects.

- ROUGE & BLEU: These are used to evaluate the quality of generated summaries, considering factors like grammar and relevance; find inspiration for AI prompts to improve summarization.

Vision-RAG Metrics: A Picture is Worth a Thousand…Data Points

Visual search brings in a different flavor.

- Mean Average Precision (mAP): Averages the precision across different recall levels.

- Normalized Discounted Cumulative Gain (NDCG): Favors systems that return highly relevant results early in the list.

Multimodal Evaluation: The Whole Shebang

Evaluating generated responses in multimodal contexts is where it gets interesting – and frankly, a bit subjective. We need to consider:

- Relevance: Does the generated text actually address the user's query, considering both text and visual context?

The Human Touch

Ultimately, cold, hard numbers can only tell us so much. Good ol' human evaluation is still crucial: real people assessing relevance, coherence, and overall usefulness. Don't underestimate the power of a good gut check!

Vision-RAG doesn't just improve search; it transforms how we interact with information.

Use Cases: Where Vision-RAG Shines in Enterprise Search

Vision-RAG, or Visual Retrieval-Augmented Generation, really comes into its own when dealing with documents containing both text and images. It's like giving your search engine a pair of glasses! Let's peek at some practical examples:

Knowledge Management: Imagine sifting through massive reports filled with charts, diagrams, and complex layouts. Vision-RAG can extract insights by understanding* these visuals, not just scanning for keywords. > Think about quickly finding "the Q3 sales chart from the 2024 report" with a simple visual query.

- Product Information Retrieval: E-commerce sites can benefit immensely. Users can search using images of products they like, and Vision-RAG finds visually similar items, even if the textual descriptions differ.

- For example, an AI could index a database of product photos, and then, using a product like PicFinderAI, return visually-similar results for users to browse.

- PicFinderAI is an AI-driven search tool that analyzes images to find similar visuals, excelling in scenarios where visual context is key.

- Technical Documentation: Forget scrolling endlessly through dense manuals. Vision-RAG can pinpoint specific components or instructions based on visual cues from the manual's illustrations.

- Patent Search: Identifying prior art becomes significantly easier. Instead of relying solely on textual claims, Vision-RAG can analyze visual features of existing patents to identify potential overlaps.

- E-commerce: Enhancing product discovery is also key.

Visual Discovery

Visual search takes center stage, offering a refreshing approach to finding what you need, even when words fail. By analyzing visual data and grounding it in textual context, Vision-RAG makes enterprise search not just powerful, but intuitive.Vision or Text? The RAG choice isn't always black and white.

Implementation Considerations: Choosing the Right Approach

Choosing between Text-RAG and Vision-RAG for your enterprise search architecture hinges on a few key factors, but don't worry; it's more like tweaking a finely tuned instrument than a shot in the dark. Let's break it down:

Data Types: This is the big one. Obviously, if your knowledge base is primarily images, Vision-RAG is the way to go. But consider: can you augment your visual data with descriptive text? Combining modalities might* be your path. Search Requirements: What are users actually* searching for? Is it object recognition ("Find all images with a specific logo") or semantic understanding ("Find all images related to a particular project phase")? This impacts the type of image encoders and embedding space you'll need.

- Performance Expectations: Vision processing is inherently more computationally intensive than text.

- Budget Constraints: Consider the infrastructure costs and the expertise required. Building a robust Vision-RAG pipeline means selecting appropriate image encoders, designing a multimodal embedding space, and optimizing for performance. A great choice is Midjourney, a popular AI art generator.

Building a Vision-RAG Pipeline

Think of your data like a library; you need a system to organize and retrieve it. The essential ingredients:

- Image Encoders: These convert images into numerical representations (embeddings) that capture their visual content. CLIP, for example, is popular for aligning text and image embeddings.

- Embedding Space Design: How will you combine visual and textual information? Careful design here impacts search accuracy.

- Optimization: Vision-RAG is resource-intensive. Consider techniques like quantization and caching to improve speed and reduce costs.

Trade-offs & Data Preprocessing

Accuracy vs. Speed vs. Cost: It's the classic engineering triangle. Higher accuracy often* means slower processing and higher costs. Find the balance that works for your needs.

- Data Preprocessing: Visual data is notoriously messy. Preprocessing steps like normalization, resizing, and data augmentation (e.g., rotating or cropping images) are vital for improving the quality of embeddings.

Here's a glimpse into the crystal ball, revealing where multimodal enterprise search is headed.

The Future of Multimodal Search: Emerging Trends and Research Directions

Convergence with Other Modalities

Vision-RAG isn't a lone wolf; its future involves harmonious integration with other AI modalities.Audio Integration: Imagine searching for a specific moment in a video by describing the sound*. Coupling visual context with audio cues (e.g., "when the engine starts," or "during the applause") enhances precision. Video-as-Context: Blending video analysis with Text-RAG opens new doors. Rather than just describing scenes, AI could understand relationships* between actions, objects, and people across time.

Unleashing the Power of Few-Shot and Zero-Shot Learning

Forget laborious training data; adaptability is the new currency.- Few-Shot Vision-RAG: This approach allows the system to learn from minimal examples, rapidly adapting to new visual concepts or document types with limited manual annotation.

The Rise of Multimodal Foundation Models

Multimodal foundation models are pre-trained on vast datasets containing diverse data types (text, images, audio, video). These models, like mega-brains, provide a foundational understanding of the world that can be fine-tuned for Vision-RAG, reducing the need for task-specific training.Ethical Considerations

As AI vision becomes more powerful, we can't ignore the ethical elephants in the room.- Bias Detection: Algorithms are reflections of their data. If the dataset is skewed, the Vision-RAG system will inherit those biases (e.g., misidentifying people from certain demographics).

- Privacy: Visual data can reveal sensitive information. Guarding personal Privacy is paramount.

- Responsible AI: Ethical AI principles must guide the development and deployment of Vision-RAG.

Conclusion: Embracing the Visual Revolution in Enterprise Search

Text-RAG brought us intelligent search, but Vision-RAG? That's next-level thinking, folks. The fundamental difference lies in their input: Text-RAG handles textual data, while Vision-RAG tackles images, videos, and other visual content. For enterprise search, this means going beyond keyword matches to understanding what's in your visuals.

Imagine searching for "manufacturing defect" and instantly pulling up images showing precisely that, instead of sifting through manuals.

Vision-RAG benefits are crystal clear:

- Enhanced Accuracy: Visual understanding provides more relevant results.

- Expanded Search Scope: Unlock insights hidden in images and videos.

- Improved Efficiency: Find information faster and more intuitively.

Keywords

Vision-RAG, Text-RAG, Multimodal RAG, Enterprise Search, Image embeddings, Text embeddings, Vector database, Semantic search, Multimodal fusion, AI Search, Visual Search, RAG architecture, AI in enterprise, Knowledge Retrieval, Generative AI

Hashtags

#VisionRAG #TextRAG #MultimodalAI #AISearch #EnterpriseSearch